A bug bounty hunter posted an article titled Instagram’s Million Dollar Bug regarding an RCE vuln in Instagram (owned by Facebook). The bug bounty hunter was ultimately able to access all of Instagram’s production data and assets (private SSL keys, API keys, etc.). This post will not discuss anything related to bug bounties, as enough ink has been spilt on that quarrel. Instead, this post will use that write-up as a case study in the steps an attacker takes and in the mitigations Instagram and other businesses can implement to proactively detect such weaknesses in their own infrastructure and to detect, deny, disrupt, degrade, or deceive such attacks.

I have no affiliations with, or inside knowledge of, Instagram or Facebook and am basing this post purely on the write-up by the bug bounty hunter. Further, from what I do know, Facebook has a solid security team, so I’m not trying to give them advice.

My goal is to give a different view of the issues than the bug bounty hunter did. The original write-up focused more on the quick fixes to secure things, whereas I am more interested in the strategic changes that should take place to prevent these problems in the future. I’m also interested in how to detect such an attack as it moves through different stages. These lessons apply to other companies as well.

Here are the problems the attacker leveraged that I’ll discuss defense techniques for:

- Internal server exposed to the Internet

- Reliance on third-party authentication

- Unaudited code resulting in unchanged secret token

- Unfettered access once the attacker gained RCE on the server

- Poor secret management

- Privilege escalation by finding more credentials

Problem: Internal server exposed to the Internet

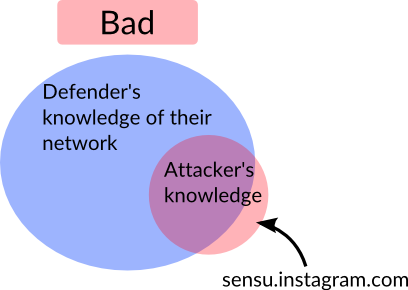

The story begins when the attacker was tipped off to an Internet-accessible server (sensu.instagram.com). The attacker did not have to take any of the normal recon steps. Normally an attacker would need to try to identify sub-domains and IP addresses owned by the business. To find sub-domains, they can try making a zone transfer (AXFR) request, or brute-forcing them. Both of these techniques can be accomplished with Bluto. That tool’s wordlist doesn’t have “sensu” as a possible sub-domain, nor did other tools I looked at. An attacker’s sub-domain wordlist should also contain other likely server names associated with common tools such as “nagios”, “jenkins”, “hudson”, “git”, etc.
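
The brute-forcing step can be sketched in a few lines. This is a minimal illustration, not how Bluto works internally: the wordlist, the function names, and the `example.com` target are all assumptions made for the example, and the resolver is injectable so the logic can be exercised without touching the network.

```python
import socket
from typing import Callable, Dict, Iterable, Optional

# Hypothetical wordlist: a few generic names plus the ops-tool names mentioned above.
WORDLIST = ["www", "mail", "sensu", "nagios", "jenkins", "hudson", "git"]


def system_resolver(hostname: str) -> Optional[str]:
    """Resolve a hostname with the system resolver; return None if it does not resolve."""
    try:
        return socket.gethostbyname(hostname)
    except OSError:  # socket.gaierror is a subclass of OSError
        return None


def brute_force_subdomains(
    domain: str,
    words: Iterable[str],
    resolve: Callable[[str], Optional[str]] = system_resolver,
) -> Dict[str, str]:
    """Return {fqdn: ip} for every candidate sub-domain that resolves."""
    found = {}
    for word in words:
        fqdn = f"{word}.{domain}"
        ip = resolve(fqdn)
        if ip is not None:
            found[fqdn] = ip
    return found


if __name__ == "__main__":
    # Only run this against domains you own or are authorized to test.
    for name, ip in brute_force_subdomains("example.com", WORDLIST).items():
        print(name, ip)
```

A defender can run the same loop against their own domains to see what an outsider would find.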

An attacker would also want to identify the IP blocks owned by the company, using something like Robtex or Hurricane Electric. Other recon tricks also exist, but the point of this is to show the ways an attacker finds information about a defender’s network, so they can discover mistakes the defender has made.

Once the attacker has a list of potential targets, they will then scan for open services using nmap.

To exist as a business, the world has to know www.instagram.com exists along with a number of sub-domains for various public services. For a defender, it’s okay for the world to know sensu.instagram.com exists, but the world shouldn’t be able to access it. In this case, the defenders likely did not know their private server had become public.

Detection: Finding exposed servers

Unlike the guesswork of the attacker, a defender should already know all the domains and sub-domains along with the IPs they own. Using this, they can set up a periodic nmap scan from an outside host that records differences and alerts them to changes.
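
The "record differences and alert" part could look something like the sketch below. It assumes the scans are saved in nmap's grepable format (`nmap -oG`), whose `Host: ... Ports: ...` line layout is parsed here; the function names are hypothetical, and a real deployment would also need scheduling and alert delivery.

```python
import re
from typing import Dict, Set


def parse_grepable(output: str) -> Dict[str, Set[str]]:
    """Map each host to its set of open 'port/proto' strings from `nmap -oG` output."""
    hosts: Dict[str, Set[str]] = {}
    for line in output.splitlines():
        match = re.match(r"Host: (\S+) .*?Ports: (.*?)(?:\t|$)", line)
        if not match:
            continue  # status lines and comments carry no port list
        host, ports_field = match.groups()
        open_ports = set()
        for entry in ports_field.split(","):
            fields = entry.strip().split("/")  # port/state/protocol/owner/service/...
            if len(fields) >= 3 and fields[1] == "open":
                open_ports.add(f"{fields[0]}/{fields[2]}")
        hosts.setdefault(host, set()).update(open_ports)
    return hosts


def diff_scans(previous: Dict[str, Set[str]], current: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Return the ports that are open now but were not open in the previous scan."""
    newly_open = {}
    for host, ports in current.items():
        added = ports - previous.get(host, set())
        if added:
            newly_open[host] = added
    return newly_open
```

Anything returned by `diff_scans` is a service that became reachable from the outside since the last run, which is exactly the event the defenders in this story would have wanted to hear about.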

There is still a problem of random stray servers that may end up falling outside of this, so they can query their EC2 instances to identify which have public IP addresses, using this script.
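
The linked script is not reproduced here, but the idea can be sketched as follows. The helper name is hypothetical; it walks the `Reservations` / `Instances` structure that the EC2 `describe_instances` call returns and picks out instances carrying a `PublicIpAddress`. The live query at the bottom assumes the third-party boto3 library and configured AWS credentials.

```python
from typing import List, Tuple


def instances_with_public_ips(describe_instances_response: dict) -> List[Tuple[str, str]]:
    """Return (instance_id, public_ip) for every instance that has a public IP."""
    exposed = []
    for reservation in describe_instances_response.get("Reservations", []):
        for instance in reservation.get("Instances", []):
            public_ip = instance.get("PublicIpAddress")
            if public_ip:
                exposed.append((instance["InstanceId"], public_ip))
    return exposed


if __name__ == "__main__":
    import boto3  # third-party; assumes AWS credentials and a default region are configured

    ec2 = boto3.client("ec2")
    for page in ec2.get_paginator("describe_instances").paginate():
        for instance_id, ip in instances_with_public_ips(page):
            print(instance_id, ip)
```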

Detection: Detecting firewall policy changes

In addition to getting the external view of the infrastructure via nmap scanning, the company will also want to check internally what changes may have been applied. Changes to AWS, along with some auditing of problems, can be identified with Security Monkey. Amazon also offers CloudTrail to log AWS API calls, but this is more useful as a forensics trail. Defenders will want to watch changes to their Security Groups and try to monitor what systems are started in those groups. For architectures that spin up servers on-demand, they’ll want to ensure they tag their servers to help with creating alerts.
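
As a rough illustration of watching Security Groups (this is not how Security Monkey is implemented), the sketch below flags ingress rules that are open to the whole Internet. The function name is hypothetical; the keys it reads (`SecurityGroups`, `IpPermissions`, `IpRanges`, `Ipv6Ranges`) are those of the EC2 `describe_security_groups` response.

```python
from typing import List, Optional, Tuple

Finding = Tuple[str, Optional[str], Optional[int], Optional[int]]


def world_open_rules(describe_security_groups_response: dict) -> List[Finding]:
    """Return (group_id, protocol, from_port, to_port) for rules open to any address."""
    findings = []
    for group in describe_security_groups_response.get("SecurityGroups", []):
        for permission in group.get("IpPermissions", []):
            cidrs = [r.get("CidrIp") for r in permission.get("IpRanges", [])]
            cidrs += [r.get("CidrIpv6") for r in permission.get("Ipv6Ranges", [])]
            if "0.0.0.0/0" in cidrs or "::/0" in cidrs:
                findings.append((
                    group["GroupId"],
                    permission.get("IpProtocol"),
                    permission.get("FromPort"),  # absent when the rule covers all protocols
                    permission.get("ToPort"),
                ))
    return findings
```

Running this on a schedule and comparing against an allow-list of intentionally public ports (for example 443 on the web tier) would turn it into an alert rather than a report.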

Next they’ll want some sort of check on their iptables rules to ensure they haven’t been disabled or modified. Security is not just trying to lock things down and find bad guys; it’s also a lot of trying to automate ways of ensuring the things you locked down stay locked down, and avoiding footguns.
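
One simple way to check that the rules stay put is to fingerprint the output of `iptables-save` and compare it to a known-good baseline. The sketch below is an assumption about how you might do that, not an established tool: it strips the timestamped comment lines and the per-chain packet counters so that only real rule changes alter the hash.

```python
import hashlib
import re
import subprocess


def ruleset_fingerprint(iptables_save_output: str) -> str:
    """Hash an iptables-save dump, ignoring timestamps and packet/byte counters."""
    normalized = []
    for line in iptables_save_output.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # comment lines carry generation timestamps
        # Chain headers look like ":INPUT DROP [1234:56789]"; drop the counters.
        line = re.sub(r"\s*\[\d+:\d+\]$", "", line)
        normalized.append(line)
    return hashlib.sha256("\n".join(normalized).encode()).hexdigest()


if __name__ == "__main__":
    # Requires root. Compare the printed value against a baseline stored off-host.
    dump = subprocess.run(["iptables-save"], capture_output=True, text=True, check=True).stdout
    print(ruleset_fingerprint(dump))
```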

Problem: Reliance on third-party authentication

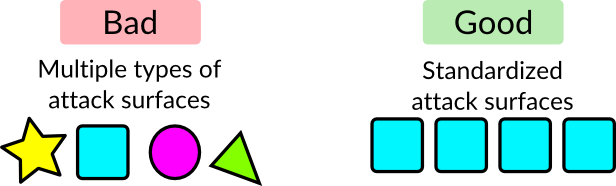

The exposed server was running https://github.com/sensu/sensu-admin which is an admin UI for Sensu, an open-source server monitoring solution that is a more modern alternative to Nagios. This UI “luckily” required authentication. This was both good and bad. It’s good because the exposed server couldn’t be immediately interacted with because it required a username and password, but it’s bad because this authentication feature ultimately led to the RCE. Defenders should try to have a homogeneous attack surface so they can focus their effort and ensure one type of surface is as secure as possible, instead of trying to secure many different types of exposed surfaces.

Protection: Use a common authentication service

All of those problems could be avoided by using a standard authentication proxy, such as an nginx reverse proxy for LDAP authentication or bitly’s open-source oauth2_proxy. By standardizing on a means of authentication, you can standardize how you do logging and alerting for it, how you handle password resets and disabling accounts, whether you always require 2-factor or client certs, and gain other benefits. There are still concerns, such as potentially enabling an attacker to pivot through the network more easily if the same password is used for all authentication, or the difficulty of restricting access and functionality to different groups. So take these into consideration when you set this up.

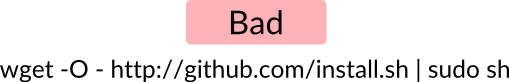

Problem: Unaudited code resulting in unchanged secret token

The attacker was able to use an unchanged secret_token. Knowing a server’s secret_token for verifying session cookies like this usually would allow you to impersonate other users, but Rails takes this one step further by enabling RCE as explained here. It is therefore critical that people change the default secret_tokens if they exist in an app.
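
To see why a known secret_token is so damaging, consider a minimal sketch of HMAC-signed cookies. The `data--digest` layout is modeled loosely on Rails 3-era signed cookies, which is an assumption on my part, and the payload here is opaque bytes rather than a serialized Ruby object. The server trusts any cookie whose signature verifies, so whoever knows the secret can mint cookies the server will accept. In Rails the payload is then deserialized, which is what turns forgery into code execution.

```python
import base64
import hashlib
import hmac
from typing import Optional


def sign(payload: bytes, secret: bytes) -> str:
    """Produce 'base64(payload)--hexdigest', signed with the shared secret."""
    data = base64.b64encode(payload).decode()
    digest = hmac.new(secret, data.encode(), hashlib.sha1).hexdigest()
    return f"{data}--{digest}"


def verify(cookie: str, secret: bytes) -> Optional[bytes]:
    """Return the payload if the signature matches the secret, otherwise None."""
    data, _, digest = cookie.rpartition("--")
    expected = hmac.new(secret, data.encode(), hashlib.sha1).hexdigest()
    if not hmac.compare_digest(digest.encode(), expected.encode()):
        return None
    return base64.b64decode(data)


if __name__ == "__main__":
    published_secret = b"value-copied-from-a-public-repo"  # hypothetical default token
    forged = sign(b"user_id=1", published_secret)  # built entirely by the attacker
    print(verify(forged, published_secret))  # the server accepts the forged cookie
```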

Sensu Admin now announces itself as being deprecated on its GitHub page and this secret_token has been removed from the code base (thanks to Tomek Rabczak from NCC Group). There are unfortunately still hundreds of thousands more repos on GitHub with public (and therefore likely static) secret tokens.

Protection: Audit third party code

This is a nightmare to do thoroughly because so much third-party code, and so many libraries and services, exist in networks today. You should still attempt at least an automated review of these apps. There is a list of known secret tokens here and a script to audit servers with them described here. Unfortunately that list is incomplete, so someone should do some GitHub grepping to fill it out.
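
A very small version of such an automated review is sketched below. It is not the audit script referred to above: it simply walks a checkout of third-party code, pulls out hard-coded `secret_token = '...'` assignments from Ruby files, and reports which ones appear in a list of publicly known tokens. The regular expression and function names are assumptions for illustration.

```python
import re
from pathlib import Path
from typing import Iterable, List, Tuple

# Matches assignments such as: App::Application.config.secret_token = 'deadbeef...'
TOKEN_PATTERN = re.compile(r"secret_token\s*=\s*['\"]([0-9a-fA-F]{30,})['\"]")


def find_secret_tokens(root: str) -> List[Tuple[str, str]]:
    """Return (file_path, token) for every hard-coded secret_token under root."""
    hits = []
    for path in sorted(Path(root).rglob("*.rb")):
        try:
            text = path.read_text(errors="ignore")
        except OSError:
            continue  # unreadable file; skip rather than abort the audit
        for match in TOKEN_PATTERN.finditer(text):
            hits.append((str(path), match.group(1)))
    return hits


def known_tokens_in_use(
    hits: List[Tuple[str, str]], known_tokens: Iterable[str]
) -> List[Tuple[str, str]]:
    """Keep only the hits whose token appears in a list of publicly known tokens."""
    known = set(known_tokens)
    return [(path, token) for path, token in hits if token in known]
```

Any hit at all deserves a look, since a token committed to a public repo is public whether or not it has made it onto someone's list yet.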

Auditing for problems like this, which could be classified as a misconfiguration, is difficult, especially for uncommon apps. I don’t have access to Nessus or other commercial automated scanners to know how well they work for this.

Problem: Unfettered access once the attacker gained RCE on the server

Once an attacker has RCE, the goal becomes detection and containment. Once an attacker obtains RCE via a service, they likely have all the access that service has, no matter what protections have been put in place. It is therefore important to implement least privilege to services (as seems to have been done by Instagram to some degree) and also detection. You want to know as soon as possible that an attacker has breached your defenses and obtained a beachhead from which they can invade the rest of your network.

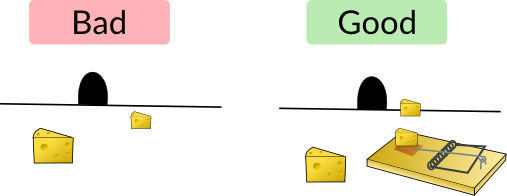

Defenders try to avoid their networks being like M&Ms with a hard, crunchy outside and soft inside, but if you’re going to focus your resources, you’re going to try to secure the outside as much as possible, and this focus will result in leaving the inside somewhat open. It therefore becomes important to detect any attackers as soon as possible once they do get inside the network. Creating alerts can be tedious and prone to false positives, so one solution is to set traps, or deceptive technologies as Gartner calls them. On one end of the complexity scale is a honeypot that is (or mimics) an entire vulnerable server. On the other end is a canary token, an interesting concept from Thinkst that they open-sourced. Canary tokens are simple web bugs, so when an attacker reads a page in their browser that contains the canary token, it gets reported.

In a perfect world, defenders would have these traps set up like land mines, where tripping them would cause the server to be automatically quarantined. This is a scary and potentially difficult thing to do in many cases, but is reasonable for something like Sensu Admin, where quarantining the server would simply mean the ops team wouldn’t have a pretty UI until the incident was resolved. More aggressively, you could have kill switches on features. For example, if you send password reset emails, and a compromise is detected there, then your users might not be able to reset their passwords until this is resolved. Tell your engineers this is like Chaos Monkey, but instead of killing EC2 instances, it kills features.

Detection: auditd

The auditd subsystem is used for monitoring on Linux. It generically allows you to log any system call, but is most effective for security at logging process creation and file access. It can be difficult to create alerts on this data, so it’s more helpful for forensics. However, it can be effective for creating alerts based on traps. For example, you could leave a fake secrets.conf file sitting around and if anything reads it, you could generate an alert. If you don’t want to take the aggressive approach of quarantining the whole system, then as a half-way point, you could use this to turn on more stringent monitoring than you would otherwise (because of performance concerns) or to snapshot an instance.
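
As a sketch of the trap idea, assume a watch rule has been added for the decoy file with something like `auditctl -w /etc/app/secrets.conf -p r -k honeyfile`; the path and the key name `honeyfile` are made up for this example. Reads of the file then show up as SYSCALL records tagged with that key in the audit log, and a small parser can turn them into alerts.

```python
import re
from typing import Dict, List


def trap_hits(audit_log: str, key: str = "honeyfile") -> List[Dict[str, object]]:
    """Return the time and executable for each SYSCALL record tagged with the trap key."""
    hits = []
    for line in audit_log.splitlines():
        if not line.startswith("type=SYSCALL") or f'key="{key}"' not in line:
            continue
        stamp = re.search(r"msg=audit\(([\d.]+):\d+\)", line)  # epoch seconds and serial
        exe = re.search(r'exe="([^"]*)"', line)  # program that touched the file
        hits.append({
            "time": float(stamp.group(1)) if stamp else None,
            "exe": exe.group(1) if exe else None,
        })
    return hits
```

Because nothing legitimate should ever read the decoy, any hit is high-signal, which is what makes it a reasonable trigger for the heavier monitoring or snapshotting described above.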

Detection: Honeypot

Beyond local file system access, if an attacker has RCE, they’re going to want to explore. At this point, a honeypot can be valuable, so you can more easily identify when an attacker is scanning your internal network and attempting to move laterally. There are many open-source projects to do this, such as honeyd, but in general these are built for researching attackers in various ways. They collect malware samples, monitor what an attacker does when they ssh in, and other functionality to study attackers, but businesses are primarily concerned with detection and alerting. Commercial solutions in this area are expanding, with Canary from Thinkst being one.
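
For pure detection, a honeypot can be as small as a listener on a port nothing legitimate should ever connect to. The sketch below is far simpler than honeyd or a commercial product, and every name in it is hypothetical: it accepts a TCP connection, reports who connected, and closes. The `alert` callable is where you would wire in your paging or logging.

```python
import socketserver
import time
from typing import Callable, Dict, Tuple


class TripwireHandler(socketserver.BaseRequestHandler):
    """Report every inbound connection; send nothing back to the client."""

    def handle(self) -> None:
        self.server.alert({
            "source": self.client_address[0],
            "port": self.server.server_address[1],
            "time": time.time(),
        })


class TripwireServer(socketserver.ThreadingTCPServer):
    """TCP listener that treats any connection as suspicious."""

    allow_reuse_address = True

    def __init__(self, address: Tuple[str, int], alert: Callable[[Dict], None]) -> None:
        self.alert = alert
        super().__init__(address, TripwireHandler)


if __name__ == "__main__":
    # Port 2222 is an arbitrary choice for the example.
    with TripwireServer(("0.0.0.0", 2222), print) as server:
        server.serve_forever()
```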

Problem: Poor secret management

In the Instagram case, once the attacker had RCE on the server, he used it to find /etc/sensu/config.json which had an AWS key pair that gave him access to an S3 bucket. The attacker then downloaded the S3 bucket, and within it were old copies of code. In one of the older versions of the code base was a Vagrant config file that contained a second AWS key pair that then gave him access to seemingly everything. Having that old, stray secret (the AWS key pair) lying around and still usable could have been avoided.

Protection: Keep secrets out of code

You can use git pre-commit-hooks to check the files you are checking in. This can not only detect things like AWS keys and SSH private keys, but is also a good practice because it can catch syntax errors in json files and all sorts of other issues. Some example pre-commit hooks are here which are based on the file name, but for a more strict check you can also regex files for AWS keys using this pre-commit-hook script.
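
The pre-commit scripts referred to above are not reproduced here, but a minimal hook in the same spirit might look like this. It is a sketch under assumptions: the patterns cover only AWS access key IDs (the `AKIA` prefix followed by 16 uppercase alphanumerics) and PEM private key headers, and the helper names are made up. Saved as an executable `.git/hooks/pre-commit`, a non-zero exit blocks the commit.

```python
import re
import subprocess
import sys
from typing import List, Tuple

ACCESS_KEY_ID = re.compile(r"(?<![A-Z0-9])AKIA[0-9A-Z]{16}(?![A-Z0-9])")
PRIVATE_KEY = re.compile(r"-----BEGIN (?:RSA |DSA |EC |OPENSSH )?PRIVATE KEY-----")


def find_secrets(text: str) -> List[Tuple[int, str]]:
    """Return (line_number, kind) for each line that looks like it holds a secret."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        if ACCESS_KEY_ID.search(line):
            findings.append((number, "AWS access key ID"))
        if PRIVATE_KEY.search(line):
            findings.append((number, "private key"))
    return findings


def staged_files() -> List[str]:
    """List files added, copied, or modified in the index."""
    out = subprocess.run(
        ["git", "diff", "--cached", "--name-only", "--diff-filter=ACM"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [name for name in out.splitlines() if name]


def main() -> int:
    failed = False
    for name in staged_files():
        # ":path" asks git for the staged version, not the working-tree copy.
        staged = subprocess.run(
            ["git", "show", f":{name}"], capture_output=True, text=True, errors="replace"
        ).stdout
        for line_number, kind in find_secrets(staged):
            print(f"{name}:{line_number}: possible {kind}")
            failed = True
    return 1 if failed else 0


if __name__ == "__main__":
    sys.exit(main())
```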

Detection: Look for secrets

Much like the steps for keeping secrets out of code, you can more generally search your organization’s GitHub repositories for sensitive files using gitrob. This should also be part of the code auditing strategy, as this would have found the secret_token.rb file from Sensu Admin.

Protection: Rolling keys periodically

AWS keys and other secrets should be rolled periodically. This is good to not only ensure any stray keys such as Instagram’s archived key are no longer effective, but also helps ensure rolling keys isn’t like rolling dice as to whether or not the site will get knocked out. When talking to developers about pushing code, they advocate that you should be deploying often, in order to recover from problems faster, ensure processes are automated, and other benefits. Security teams should also get comfortable rolling keys for the same reasons (although the frequency doesn’t need to be as aggressive).
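
A first step toward routine rotation is simply knowing which keys are overdue. The sketch below filters the `AccessKeyMetadata` entries that the IAM `list_access_keys` call returns; the 90-day threshold and the function name are assumptions for the example, not a recommendation from the original write-up.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional


def stale_access_keys(
    list_access_keys_response: dict,
    max_age_days: int = 90,
    now: Optional[datetime] = None,
) -> List[str]:
    """Return the IDs of active access keys created more than max_age_days ago."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [
        key["AccessKeyId"]
        for key in list_access_keys_response.get("AccessKeyMetadata", [])
        if key.get("Status") == "Active" and key["CreateDate"] < cutoff
    ]
```

With boto3 (third-party), the input would come from `boto3.client("iam").list_access_keys(UserName=...)`, whose `CreateDate` values are timezone-aware datetimes.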

Protection: Using a secret management solution

So if you’re not keeping these secrets in your source control, where do you keep them? There are a number of open-source secret management solutions, such as Lyft’s confidant, Mozilla’s sops, and Hashicorp’s Vault. These help to secure access to secrets, create audit logs for that access, and make rolling creds easier.

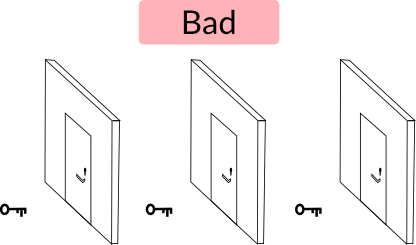

Problem: Privilege escalation by finding more credentials

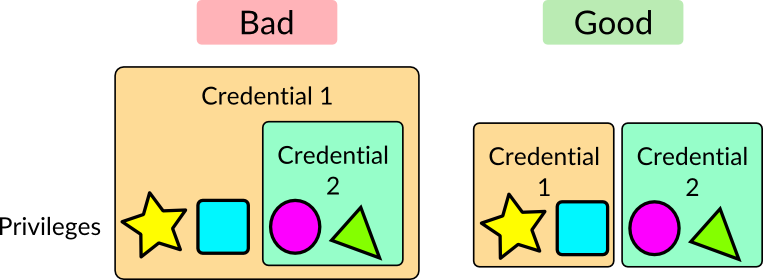

The previous problem allowed the attacker to find additional AWS key pairs because they were stored in data the attacker had access to with the first key pair. The new credentials demonstrated a different problem, though: they granted a superset of the privileges of the previous credentials. Instead of tying the least privileges to each service, it seems there was an AWS key pair that provided god mode on Instagram’s networks, and it was not well secured.

Protection: Least privilege

Amazon’s IAM Best Practices advocate least privilege along with other recommendations. Once the secret management solution described above is implemented, it becomes easier to restrict services, because maintaining many secrets is no longer a burden. You can then create more secrets that each grant fewer privileges, instead of fewer secrets that each grant more.
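
To make "god mode" concrete, the sketch below contrasts a narrowly scoped IAM policy document with a wildcard one and flags the latter. The bucket name is invented for the example, and the check is deliberately crude: it only looks for `Allow` statements that pair a wildcard action with a `*` resource.

```python
from typing import List, Union


def _as_list(value: Union[str, list]) -> list:
    """IAM allows a single string where a list is expected; normalize to a list."""
    return [value] if isinstance(value, str) else list(value)


def wildcard_statements(policy: dict) -> List[dict]:
    """Return the Allow statements that grant wildcard actions on all resources."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]
    flagged = []
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        actions = _as_list(statement.get("Action", []))
        resources = _as_list(statement.get("Resource", []))
        if any(a == "*" or a.endswith(":*") for a in actions) and "*" in resources:
            flagged.append(statement)
    return flagged


# Hypothetical policy for a monitoring service: read-only access to one bucket.
SCOPED_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Action": "s3:GetObject",
        "Resource": "arn:aws:s3:::example-sensu-config/*",
    }],
}

# The kind of policy that turns one leaked key into access to everything.
GOD_MODE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{"Effect": "Allow", "Action": "*", "Resource": "*"}],
}
```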

Conclusion

To restate the introduction, this document isn’t meant to call out Instagram’s security specifically. Simple mistakes can unfortunately topple the strongest structures in infosec. This document took advantage of the thorough notes provided by the attacker. This document sought to point out ways to avoid some of those mistakes, limit their impacts, and identify attackers that attempt to take advantage of those mistakes.