This article should be read in combination with Creating disaster recovery backups, which discusses best practices for backups, including how to encrypt them properly. For this article, I’ll assume you want to back up your data to Google Cloud. This means you normally run on AWS, Azure, or somewhere else, and want to use Google as your backup location. This article describes how to set up a location for your backups and how to get them there.

Caution: A proper backup system should be write-only, with no ability to over-write, delete, or read existing files (except by a separate account that performs the disaster recovery). However, the Google Cloud platform is much less mature than other cloud platforms with respect to permissions. These backups do allow the backup account to read the files it has written (although those files should be encrypted in such a way that they provide no value).

I am unable to delete or over-write these backups with the credentials of the backup account, but I fear that could be due to my lack of understanding rather than because it is actually impossible. That uncertainty is a concern for some disaster scenarios (such as an attacker who has compromised your backup system), but for most disaster scenarios this setup is sufficient. You could also overcome these deficiencies by setting up a process that copies the backups somewhere else and deletes the originals, but that is outside the scope of this article.

This article elaborates on Scott Helme’s article Easy, cheap and secure backup with Google Cloud Platform.

Set up a location to store your backups

Google provides a service called Coldline for low-cost archival storage. Unlike Amazon Glacier, which may take up to 5 hours to start a retrieval, Google Coldline data retrieval is instant. Keep in mind though that if you’re retrieving backups from Google to Amazon, those backups still need to be transported across the Internet, so if you have petabytes, it will still take a while.

Coldline costs $0.007/GB/month for storage. It only has a 99% availability SLA, but does have a 99.999999999% durability SLA over a given year. Retrieval costs $0.05/GB. So let’s say you back up 100GB of data every night and keep your backups for a year: once a full year of backups has accumulated, you are storing 36,500GB, which costs about $255.50/month, or $3066/year. Each time you recover one backup (hopefully you’re only testing recoveries), it will cost $5. Of course you could get more complicated and store only monthly backups for a year while keeping nightly backups for up to a month, or use some other scheme to save money. I don’t know what bandwidth in/out costs, if anything.
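The arithmetic above can be sketched as a couple of small shell functions. This is only a rough estimate under the prices quoted in this article (which may have changed), and the function names and the 42-backup retention scheme are my own illustrative assumptions.

```shell
#!/bin/sh
# Prices are the ones quoted in the article; check current pricing before relying on them.

# storage_cost_per_year GB_PER_BACKUP BACKUPS_RETAINED PRICE_PER_GB_MONTH
# Yearly storage bill once the retention window is full.
storage_cost_per_year() {
    LC_ALL=C awk -v gb="$1" -v n="$2" -v price="$3" \
        'BEGIN { printf "%.2f\n", gb * n * price * 12 }'
}

# retrieval_cost GB PRICE_PER_GB
# One-off cost of pulling that many GB back out.
retrieval_cost() {
    LC_ALL=C awk -v gb="$1" -v price="$2" 'BEGIN { printf "%.2f\n", gb * price }'
}

storage_cost_per_year 100 365 0.007   # nightly 100GB backups, all kept for a year
retrieval_cost 100 0.05               # restoring a single 100GB backup
storage_cost_per_year 100 42 0.007    # hypothetical scheme: 30 nightlies + 12 monthlies
```

Run as written, this prints 3066.00, 5.00, and 352.80, which shows how much a tiered retention scheme could save compared to keeping every nightly backup.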

Setting up Coldline

Register an account with Google at https://cloud.google.com/

You’ll end up tying this back to a Google account. Depending on how paranoid you are, you may want to ensure this account isn’t tied back to your normal work email account.

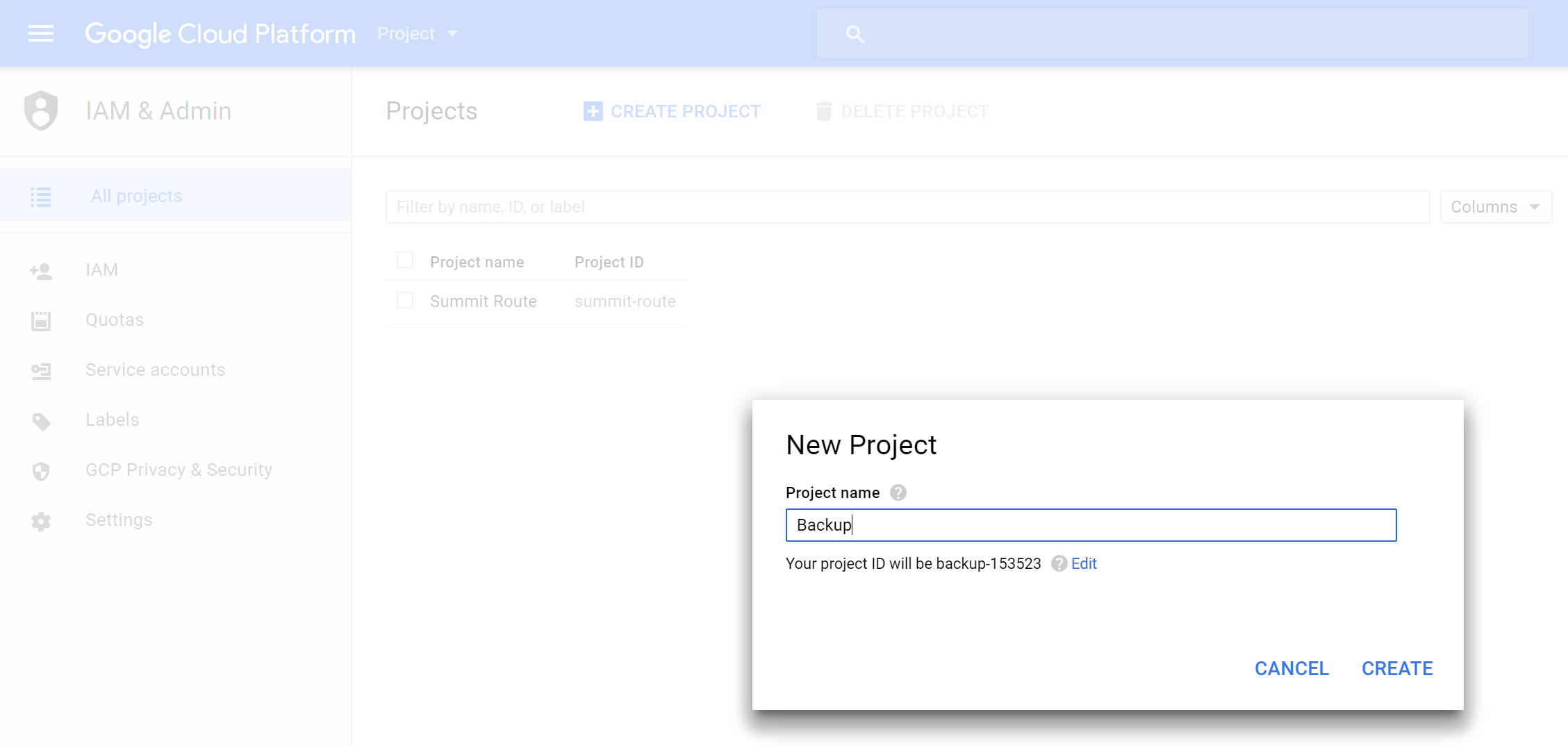

Eventually, you’ll end up at https://console.cloud.google.com and have the opportunity to “Create Project”. I’m naming mine “Backup”.

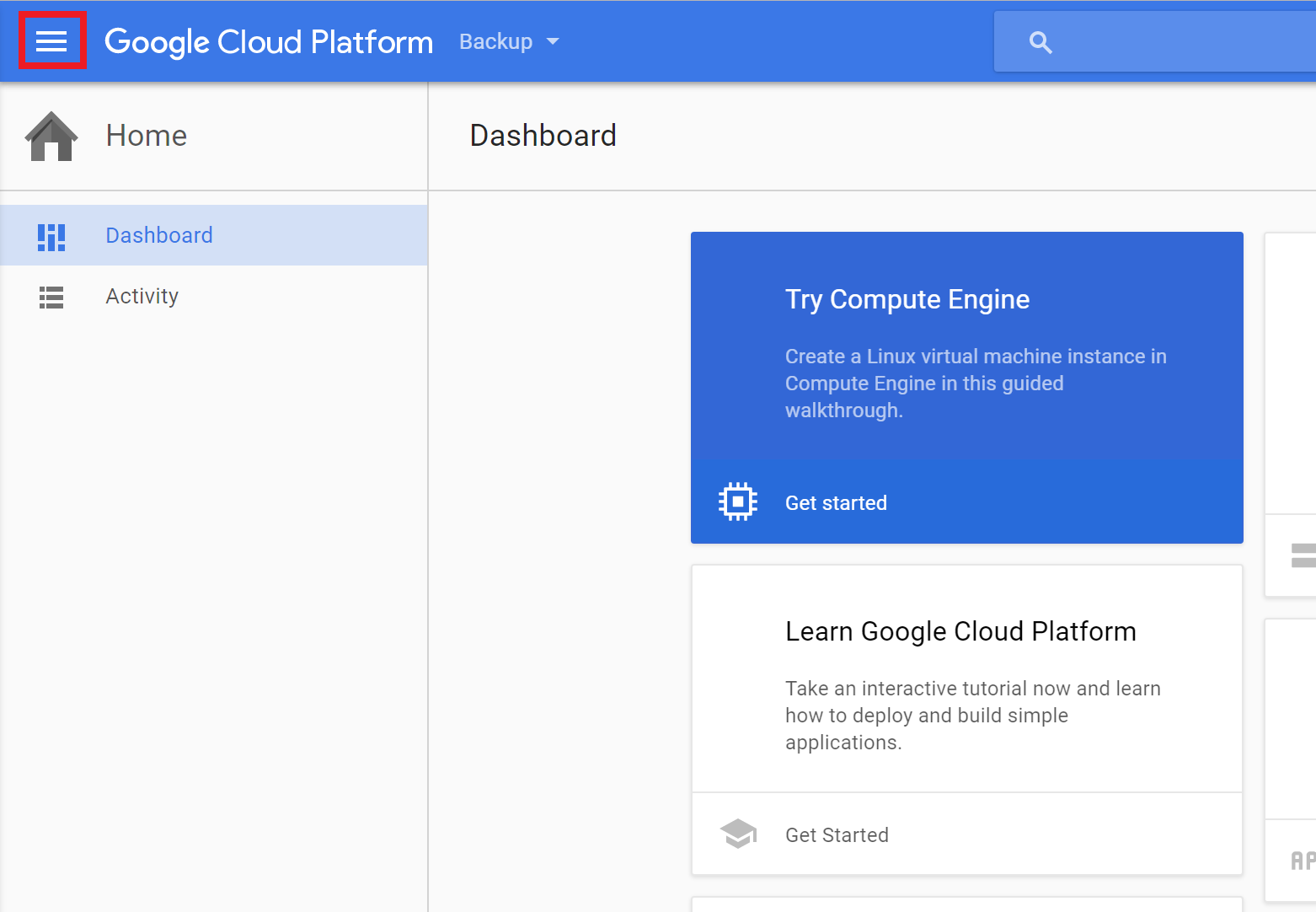

This will take you to a dashboard that is even worse than Amazon’s dashboard, because you will have no idea where the actual services are. They can be accessed by clicking the hamburger in the top left.

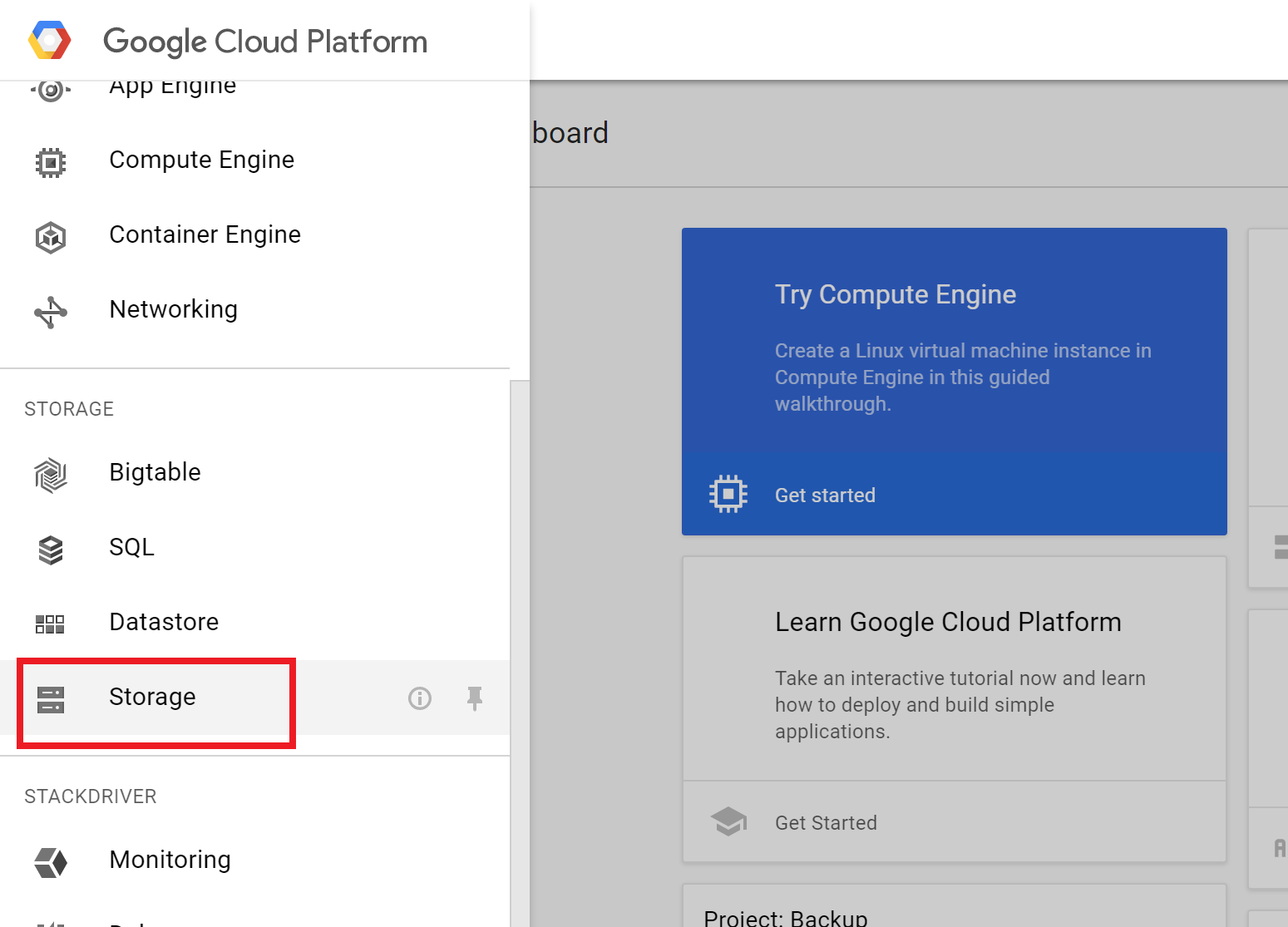

Scroll down and select “Storage”

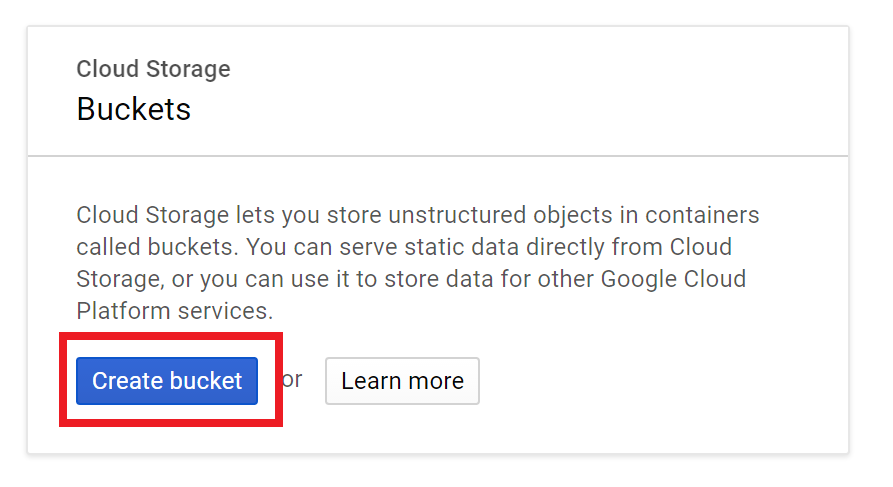

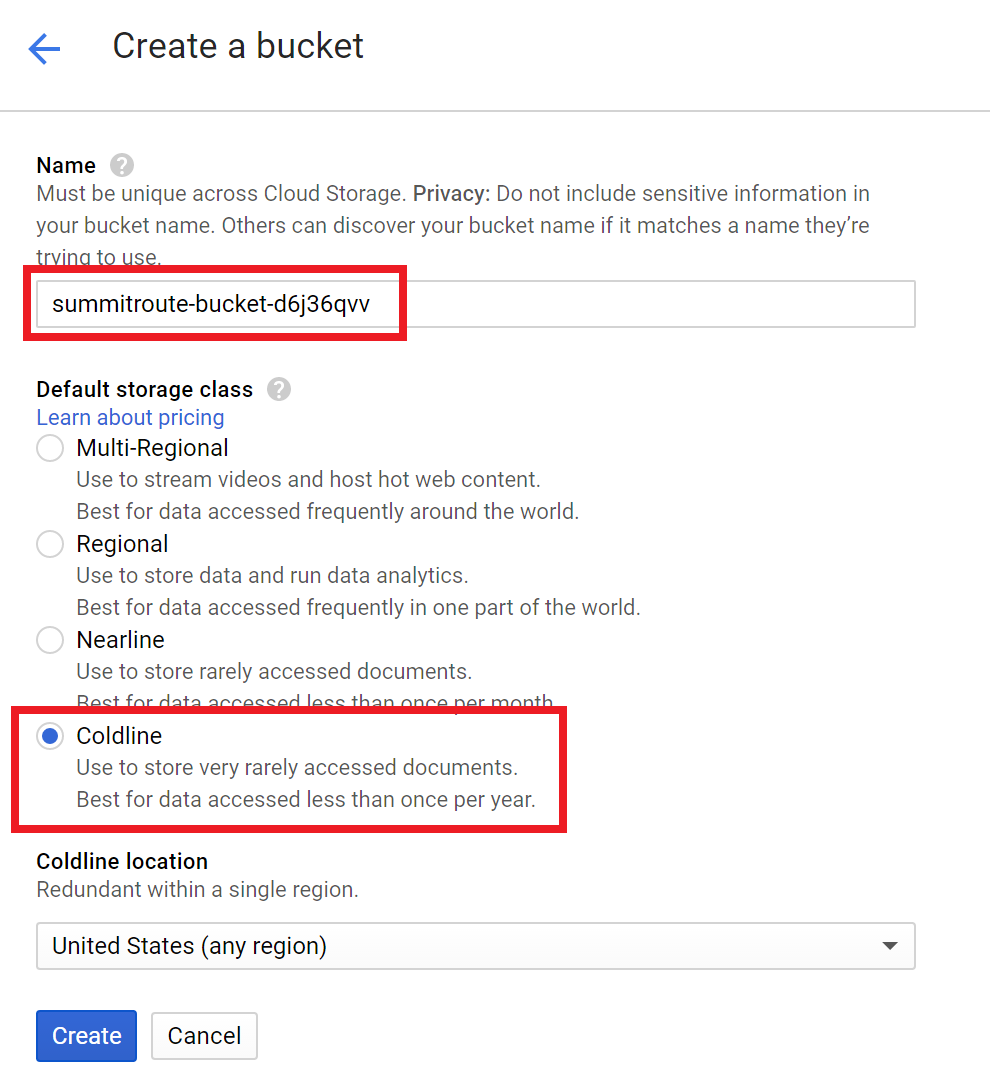

Create your bucket.

Name your bucket and select “Coldline”. Bucket names can be brute-forced. People won’t be able to see inside the bucket, but they’ll know it exists, so you might as well add some random characters to the end of the name, as I’ve done by naming my bucket “summitroute-bucket-d6j36qvv”.
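One way to generate such a random suffix is sketched below. The function name `random_suffix` and the prefix `example-backups` are placeholders of mine, not anything from the console, and the commented-out `gsutil mb` line assumes the command line tools are already installed and authenticated as the project owner.

```shell
#!/bin/sh
# random_suffix LENGTH: print LENGTH random characters from [a-z0-9].
random_suffix() {
    LC_ALL=C tr -dc 'a-z0-9' < /dev/urandom | head -c "$1"
}

# "example-backups" is a placeholder prefix; pick your own.
bucket="example-backups-$(random_suffix 8)"
echo "$bucket"

# The bucket could also be created from the command line instead of the console
# (assumes gsutil is installed and logged in as an account allowed to create buckets):
#   gsutil mb -c coldline "gs://$bucket"
```

Eight characters from a 36-character alphabet is enough to make guessing impractical while keeping the name easy to type.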

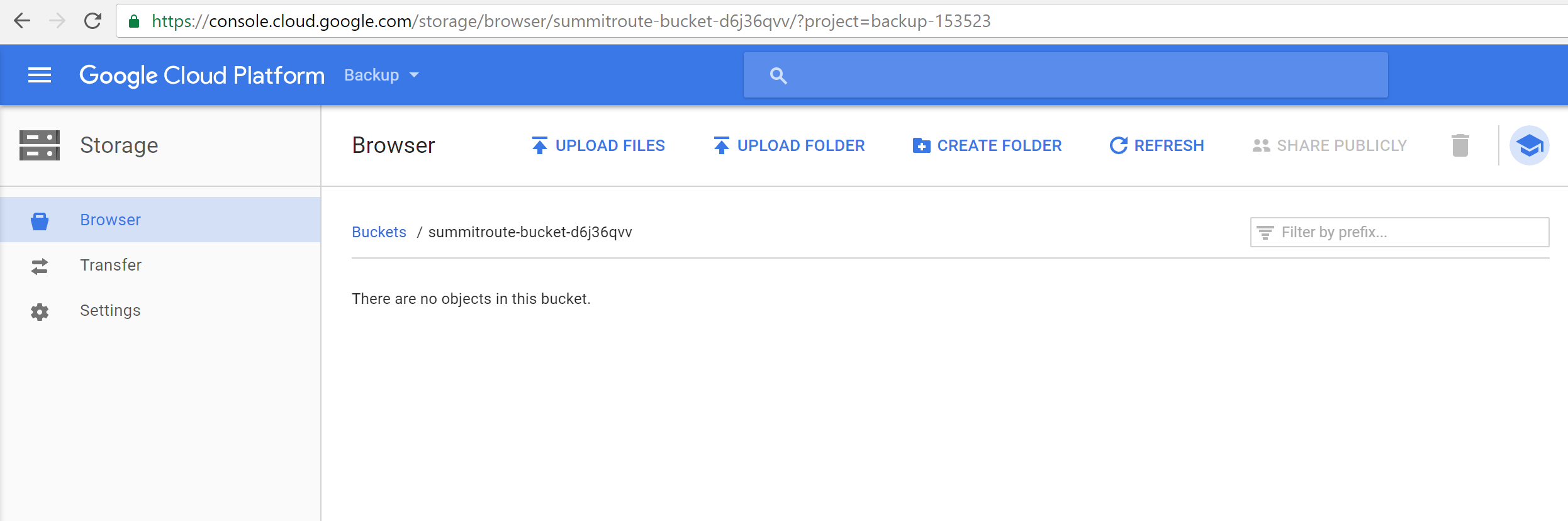

You now have an empty bucket. By default it is private so only you can read and write to it.

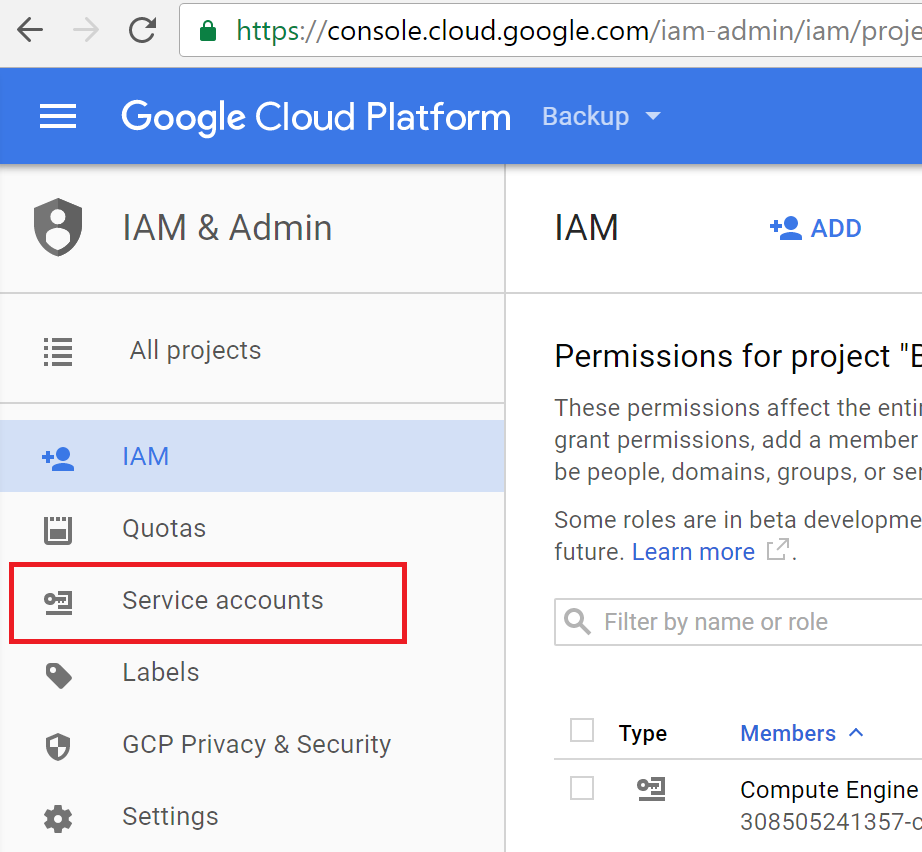

Create a service account

Now that we have a bucket, we need a way of writing to it. As mentioned, I want to create a credential that only provides write access to the bucket, without the ability to overwrite or read any existing files. To do that, we’ll create a “Service account”.

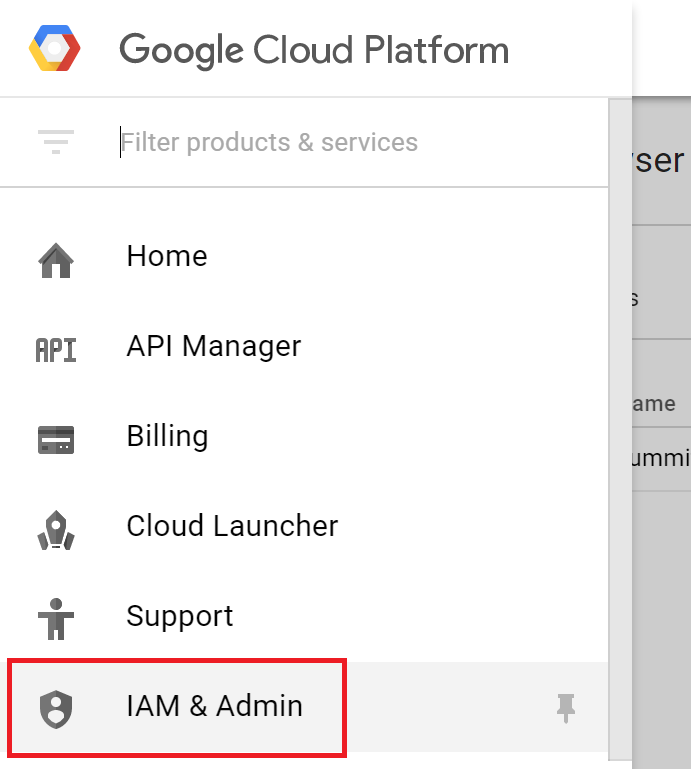

Click on the hamburger again and select “IAM & Admin”

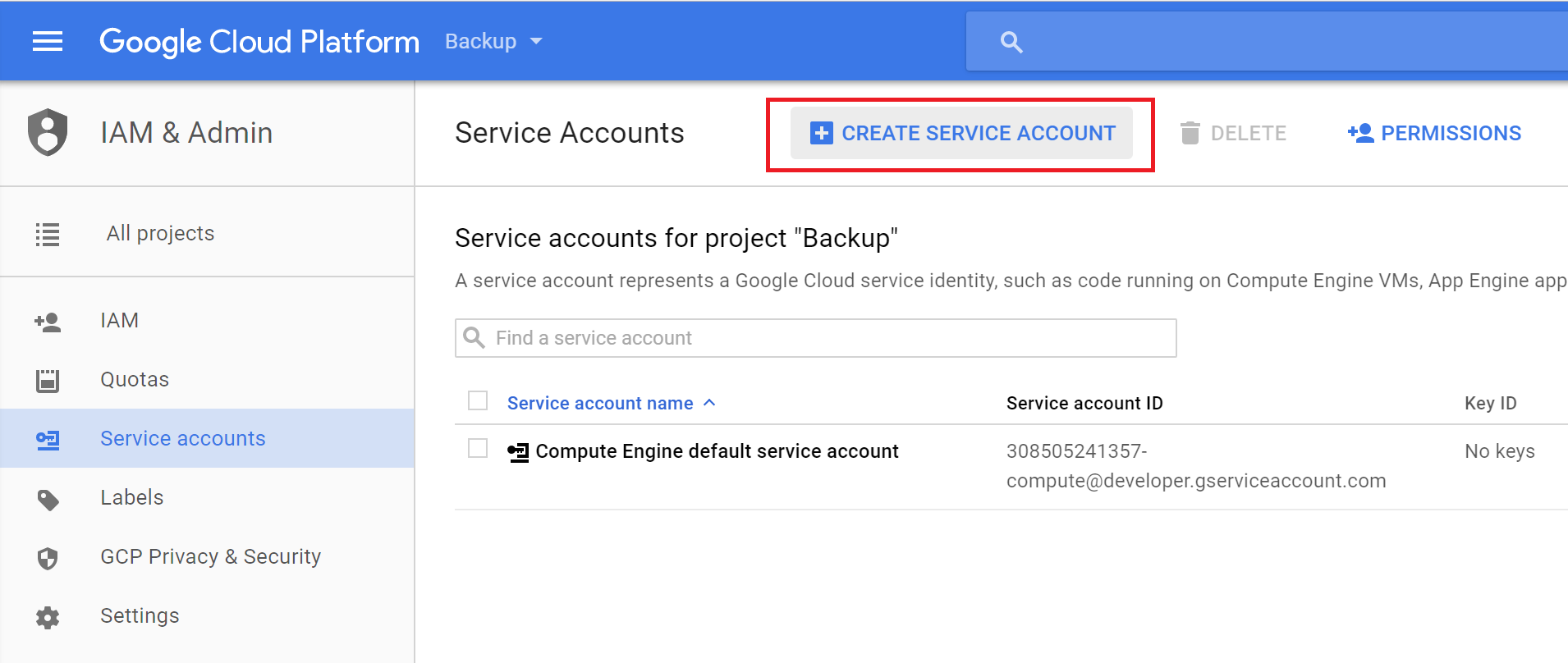

Click “Create Service Account”

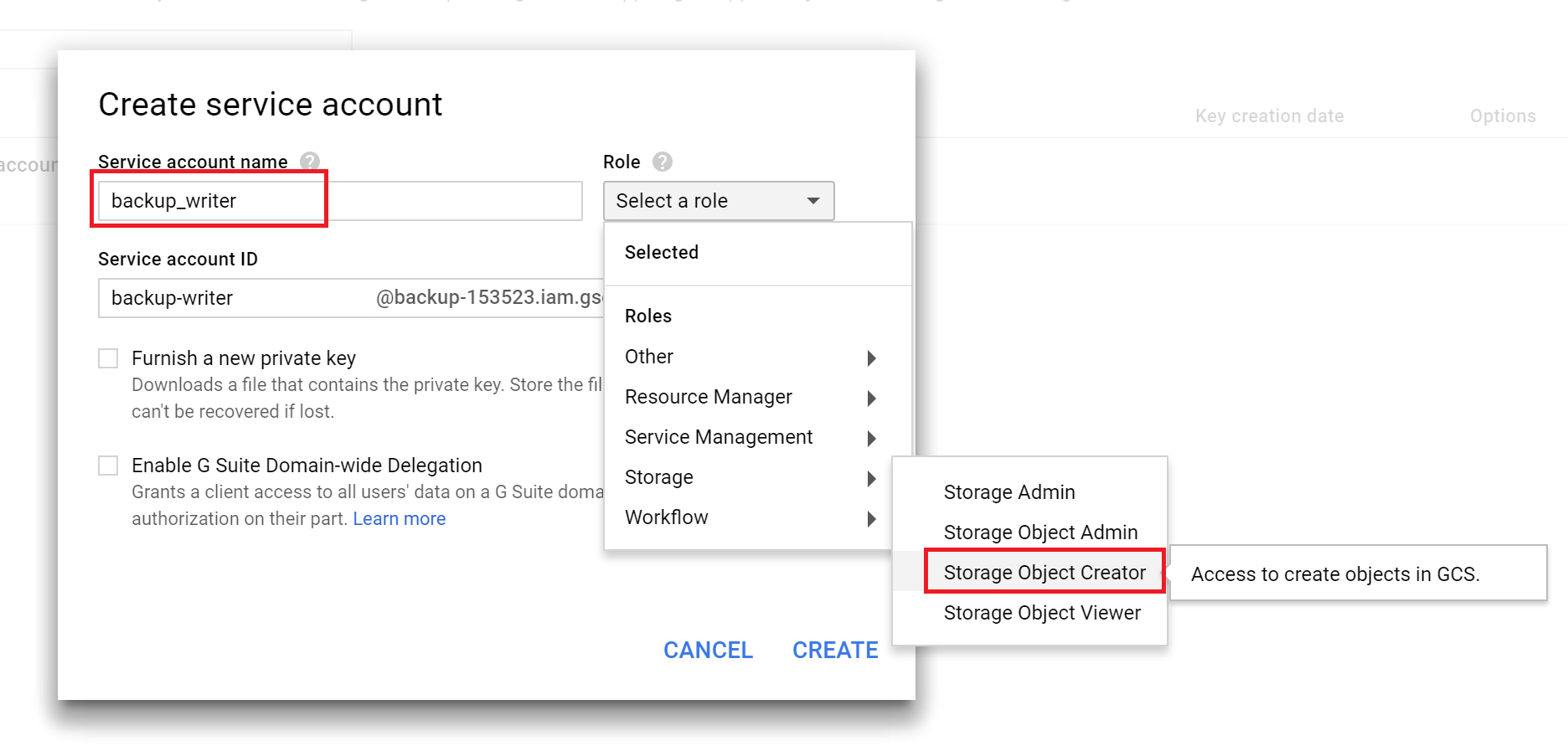

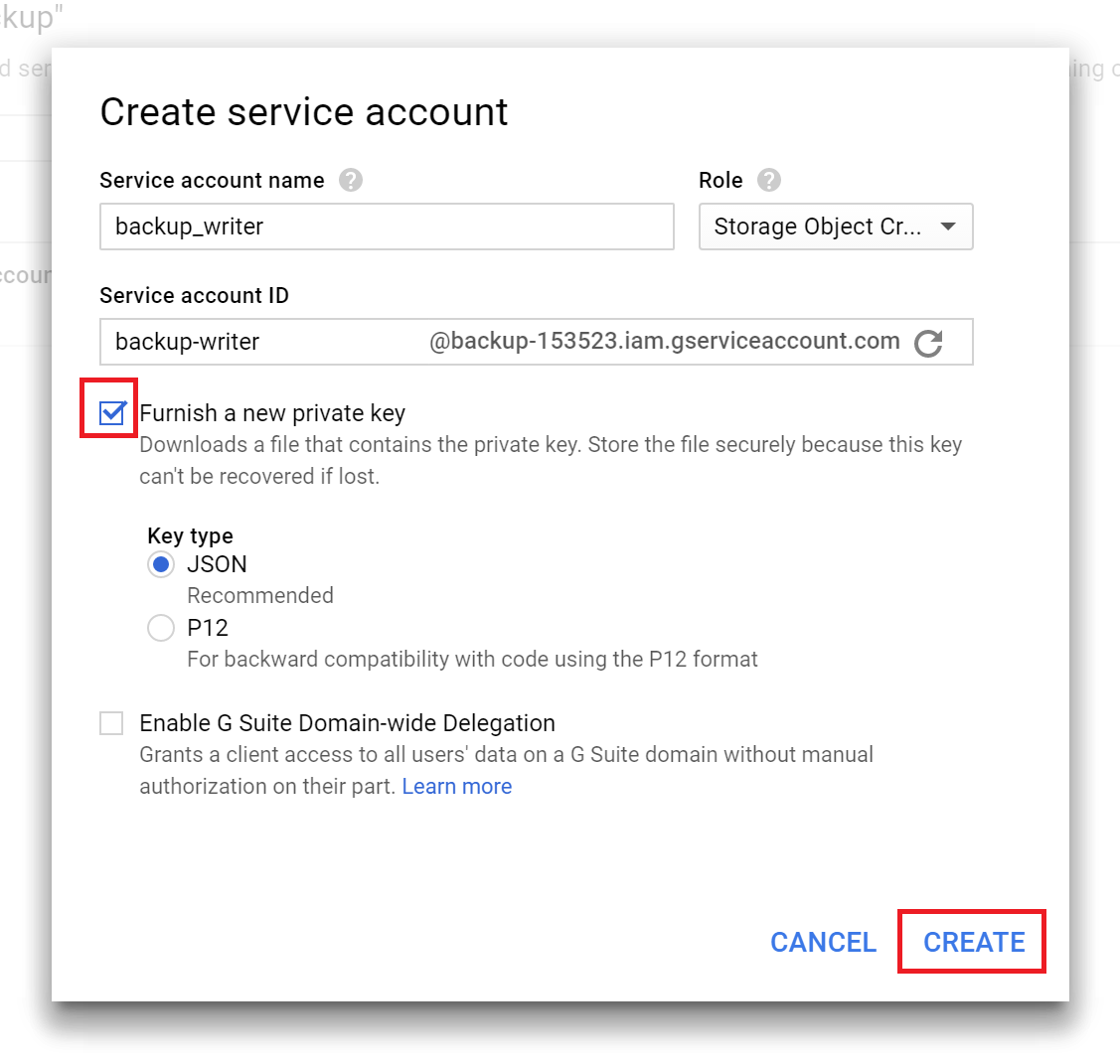

Name it (I chose “backup_writer”) and select the Role->Storage->Storage Object Creator.

Select the checkbox “Furnish a new private key” and click “Create”.

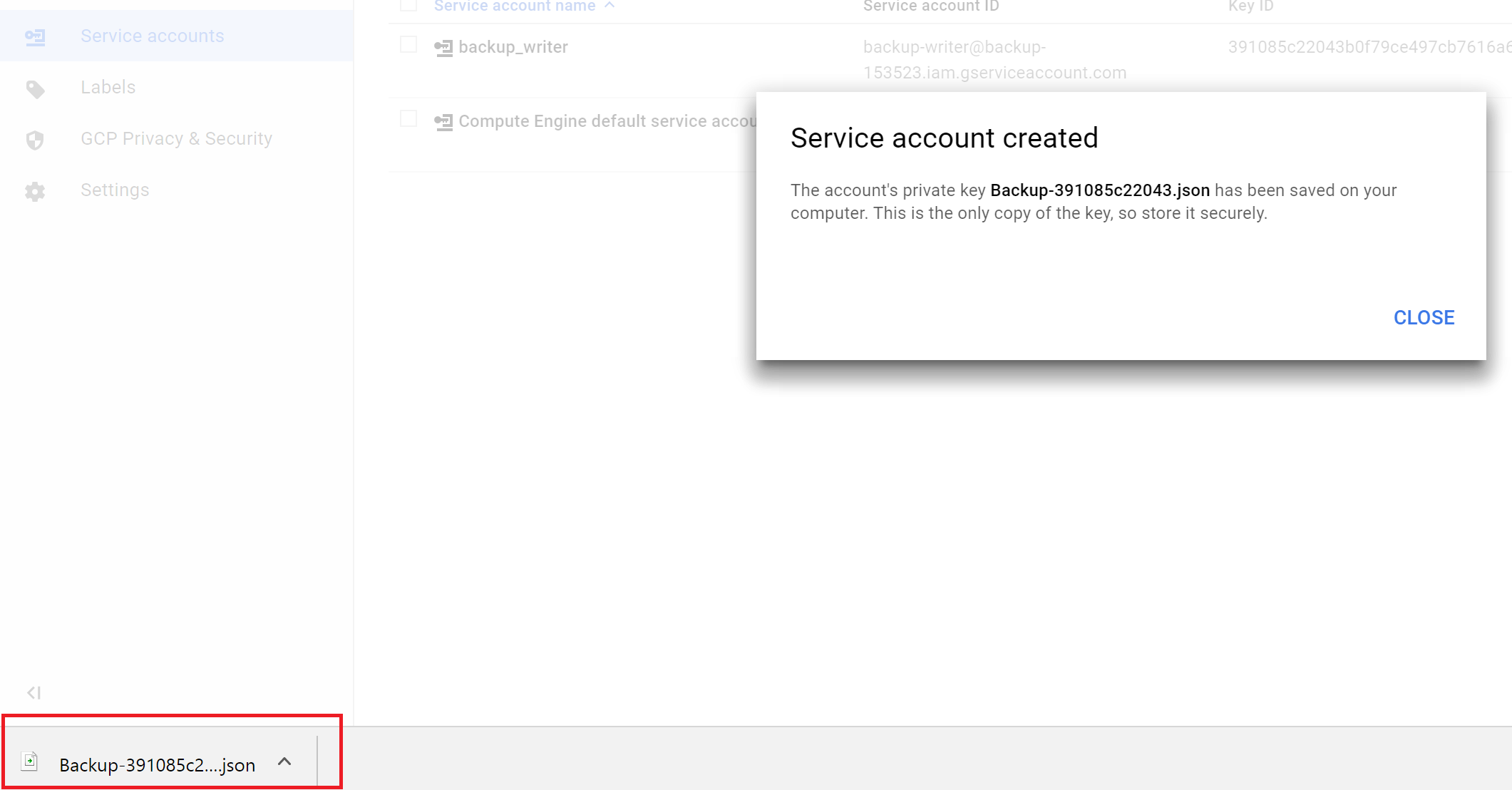

Credentials for this account are automatically downloaded as a JSON file when you create it.

Install the command line tools

On your server that will perform the backups, you’ll need to install Google Cloud’s command line tools.

As root:

# Add the package source

export CLOUD_SDK_REPO="cloud-sdk-$(lsb_release -c -s)"

echo "deb http://packages.cloud.google.com/apt $CLOUD_SDK_REPO main" | tee /etc/apt/sources.list.d/google-cloud-sdk.list

# Add the Google Cloud public key

curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -

# Install the tools

apt-get update && apt-get install google-cloud-sdk

Set up your credentials

Copy the JSON credential file that you downloaded previously to this system, and configure the gcloud tools with it:

gcloud auth activate-service-account --key-file=Backup-391085c22043.json

You can now delete that file, since gcloud keeps its own copy of the credentials in its configuration directory.
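If you want to script this step, a minimal sketch might look like the following. The helper name `activate_key` is hypothetical; it only wraps the `gcloud auth activate-service-account` command shown above and removes the key file if activation succeeded.

```shell
#!/bin/sh
# activate_key KEYFILE: load a service-account key into gcloud, then remove the file.
activate_key() {
    keyfile="$1"
    # Keep the key unreadable by other users while it is still on disk.
    chmod 600 "$keyfile" || return 1
    # Only delete the key when activation succeeded.
    if gcloud auth activate-service-account --key-file="$keyfile"; then
        rm -f "$keyfile"
    else
        echo "activation failed; keeping $keyfile" >&2
        return 1
    fi
}

# Example, using the file name from this article:
#   activate_key Backup-391085c22043.json
#   gcloud auth list    # shows which account is now active
```

Keeping the file on failure means you can retry without downloading a new key.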

Send files

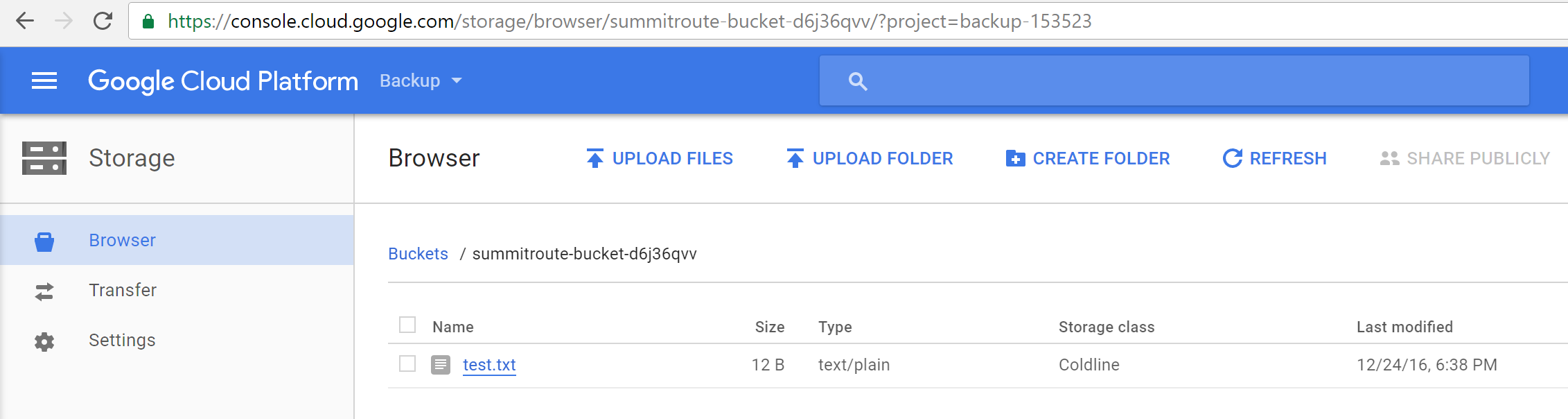

Now that you have a bucket and tools configured to be able to access it, try sending files to it:

echo hello world > test.txt

gsutil cp test.txt gs://summitroute-bucket-d6j36qvv/

You should be able to see and download this file from the bucket in your browser when logged in to the Google Cloud console with your own account.
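Because the backup account is not supposed to over-write existing files, each real backup needs its own object name. A simple way to get that, sketched here with a hypothetical helper `backup_url`, is to put a UTC timestamp at the front of the name.

```shell
#!/bin/sh
# backup_url BUCKET NAME: print a gs:// URL whose object name starts with a UTC timestamp.
backup_url() {
    printf 'gs://%s/%s-%s\n' "$1" "$(date -u +%Y%m%dT%H%M%SZ)" "$2"
}

backup_url summitroute-bucket-d6j36qvv nightly.tar.gz.gpg

# A nightly job might then run something like this, where backup.tar.gz.gpg is a
# hypothetical, already encrypted archive:
#   gsutil cp backup.tar.gz.gpg "$(backup_url summitroute-bucket-d6j36qvv nightly.tar.gz.gpg)"
```

Putting the timestamp first also means the objects sort chronologically in the bucket listing.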

Confirm your access policies are correct

Try to over-write your file:

# Attempting to over-write your file should return: "AccessDeniedException: 403 Forbidden"

gsutil cp test.txt gs://summitroute-bucket-d6j36qvv/
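If you want to repeat this check automatically, for example after changing roles, the following sketch classifies the result. The function name `check_overwrite_denied` is my own, and it assumes the error text matches the "AccessDeniedException" message shown above. It must only be run after the file has already been uploaded once, otherwise the copy is a first upload and will legitimately succeed.

```shell
#!/bin/sh
# check_overwrite_denied FILE URL
# Exit status: 0 = denied as expected, 1 = over-write succeeded, 2 = failed for another reason.
check_overwrite_denied() {
    if output="$(gsutil cp "$1" "$2" 2>&1)"; then
        echo "WARNING: over-write succeeded" >&2
        return 1
    fi
    case "$output" in
        *AccessDeniedException*)
            echo "over-write denied"
            return 0 ;;
        *)
            # Network problems, bad bucket names, etc. prove nothing about permissions.
            echo "inconclusive: $output" >&2
            return 2 ;;
    esac
}

# Example:
#   check_overwrite_denied test.txt gs://summitroute-bucket-d6j36qvv/
```

Treating other failures as inconclusive avoids mistaking a network error for a working access policy.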

Conclusion

You now have the ability to send your backups to another cloud service. As mentioned at the start of the article, your backup account does unfortunately still have read permissions on your backups (and possibly more?), but this is sufficient for most disaster recovery concerns.