When I train companies on AWS security, I advise them to have backups. In an infamous breach, a company named Code Spaces that ran on AWS had all of its data deleted by an attacker and had to shut down within hours of the breach. I didn’t have my own house in order as well as I would have liked, so this week I set up automated backups, and did so in a generalized way that others can use via my CDK app backup_runner.

My business relies on Google Workspace (previously known as G Suite) for email and Google Drive. My account is part of the Advanced Protection Program, but in a worst-case scenario an attacker who obtained code execution on my laptop could wipe or ransom this data. I don’t know how Google would handle such an incident, and other scenarios, however unlikely, also make me want backups of this data. My business is only myself, so I don’t need a full-featured vendor solution, and it is nice to avoid the third-party risk of a vendor having access to all my email and company data, so I set up the backups myself in AWS.

Tools used

I used got-your-back and rclone for the email and Drive backups, respectively. Got-your-back is made by Jay Lee, a manager at Google for Enterprise support, and the creator of GAM, which is the de facto standard for interacting with the G Suite APIs. I could have used GAM for the Drive backups, but it doesn’t maintain directory structure, which is important to me, so I turned to rclone.

Architecture

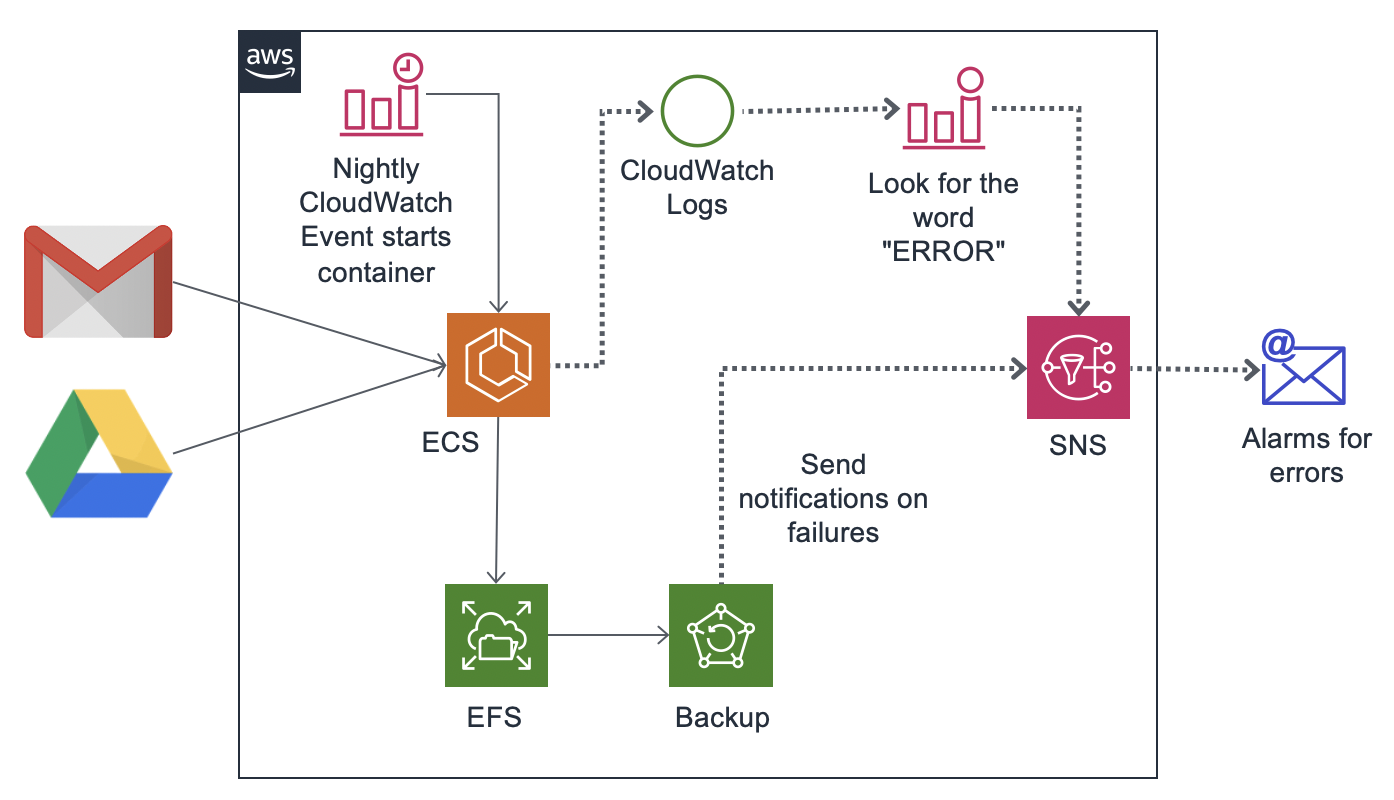

Historically, a number of folks have set up an EC2 instance and an S3 bucket for this purpose, but I decided to run a nightly ECS container with an attached EFS, which itself is backed up via AWS Backup. The primary benefit of this architecture is that AWS Backup takes care of incremental backups for me, meaning it only stores the changes each night, and makes it easy to recover the state of my data from any day in the past 35 days (this could be set longer).

The output of the script run by the ECS task is recorded to CloudWatch Logs, where a CloudWatch Alarm monitors for the word “ERROR”. If found, the alarm is sent to an SNS topic that I’m subscribed to. The AWS Backup service will also generate events that are sent to the SNS topic under error conditions.
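The same kind of monitoring can be sketched with the AWS CLI. This is not taken from the backup_runner app: the function name, the `BackupRunner` namespace, and the filter, metric, and alarm names are placeholders chosen for illustration, and the 5-minute period is an assumption.

```shell
# Sketch: alarm when the word ERROR shows up in a log group.
# All names below (backup-errors, BackupErrors, BackupRunner) are
# hypothetical placeholders, not values from the backup_runner app.
create_error_alarm() {
    local log_group="$1"      # CloudWatch Logs group the ECS task writes to
    local sns_topic_arn="$2"  # SNS topic that should receive the alarm

    # Emit a metric data point for every log event containing the term ERROR.
    aws logs put-metric-filter \
        --log-group-name "$log_group" \
        --filter-name backup-errors \
        --filter-pattern "ERROR" \
        --metric-transformations \
            metricName=BackupErrors,metricNamespace=BackupRunner,metricValue=1

    # Alarm when at least one ERROR was counted in a 5-minute period.
    aws cloudwatch put-metric-alarm \
        --alarm-name backup-errors \
        --namespace BackupRunner \
        --metric-name BackupErrors \
        --statistic Sum \
        --period 300 \
        --evaluation-periods 1 \
        --threshold 1 \
        --comparison-operator GreaterThanOrEqualToThreshold \
        --treat-missing-data notBreaching \
        --alarm-actions "$sns_topic_arn"
}
```

Calling `create_error_alarm <log-group> <sns-topic-arn>` issues both commands. Treating missing data as not breaching keeps the alarm quiet on nights when no ERROR line is logged.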

Security considerations

Encryption

AWS Backups are always encrypted. I configured my EFS to be encrypted at rest, and the access from the ECS is configured to use encryption in transit.

Business continuity

I am storing the backup vault in the same account as the EFS, as my primary motivation is to get the data copied to AWS. AWS did just release cross-account backups for AWS Backup, but my data already exists in two places (the live data in G Suite and the copy in the AWS account).

I did set the access policy on the Vault to restrict some ways of destroying the backups, but there seem to be limitations in the CDK for deploying an ideal policy here, so a privileged attacker could get around this by editing/deleting the policy. One thing that AWS Backup does not offer is the concept used by S3 Object Lock and Glacier Vault locking which enforces strong WORM (Write Once Read Many) controls.

Secrets management

As the EFS contains all my email and data, it seems reasonable to just put the secrets used to download this data directly on that EFS, as compromising those creds does not extend the access beyond what is already on the EFS.

Costs

By running a nightly ECS task, I avoid some of the compute costs of running an EC2 instance, but EFS costs $0.30/GB per month vs $0.023/GB for S3 (EFS is 13x more!). Files in the EFS should move to the less expensive Infrequent Access storage tier after 7 days, which is only $0.025/GB and roughly the same cost as S3. S3 itself has an infrequent access tier at only $0.0125/GB, and can be made even less expensive by using Glacier Deep Archive at only $0.00099/GB. However, given that I have 30GB in G Suite, my costs should end up around $9/yr for using EFS (once most of the data moves to infrequent access), so I’m not going to waste time trying to shave pennies off that.
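The $9/yr figure follows from simple arithmetic, sketched here with a small helper. It assumes the prices quoted above are per GB per month and that only storage is billed (request and access charges are ignored); the function name `yearly_cost` is made up for this example.

```shell
# Yearly storage cost in dollars for a given size (GB) and price ($/GB-month).
# Assumes a flat per-GB monthly price and ignores request/access charges.
yearly_cost() {
    awk -v gb="$1" -v price="$2" 'BEGIN { printf "%.2f\n", gb * price * 12 }'
}

# Usage with the 30GB and the prices quoted in this post:
#   yearly_cost 30 0.30    # EFS standard storage
#   yearly_cost 30 0.025   # EFS Infrequent Access
#   yearly_cost 30 0.023   # S3 standard storage
```

For 30GB this works out to $108.00 per year on EFS standard storage, $9.00 on EFS Infrequent Access, and $8.28 on S3 standard storage.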

AWS Backup is $0.05/GB, and does have a cold-storage tier at $0.01/GB, but that requires storing your data for over 90 days, and I only store mine for 35 days. The backups are incremental, meaning it automatically figures out what the new files are and only charges me for those.

Having run this setup for a month, my total costs are looking to be about $50/yr.

Using S3 and its storage tiers would result in cost savings, but the time saved in having AWS Backup handle some of these backup concepts instead of me figuring them out is worth the expense.

Deploying

To set up this architecture, I created a CDK app. The repo to clone is called backup_runner.

The ECS task just calls a script named nightly.sh that it expects to find on the EFS. As a result, this app is generalized for any backup solution, so it could easily back up Office 365 or other data.

Once the CDK app is deployed, it won’t actually copy over any data, because again, it just runs a script. So in order to set this up you need to spin up an EC2 instance in the subnet where the EFS is, access a command line on that instance (via SSH, EC2 Instance Connect, or Session Manager), attach the EFS to it, and then set up got-your-back and rclone, or whatever else you had in mind. Setting those up is outside of the scope of this post, but it involves setting up some OAuth creds. Be sure to copy the ~/bin that got-your-back creates and the ~/.config that rclone creates to the EFS. You’ll want a larger instance, like a t3.large, for got-your-back, and after setting this up and testing it, you can terminate that instance.
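Attaching the EFS from that EC2 instance can be sketched as follows. This assumes the amazon-efs-utils package is installed on the instance (it provides the `efs` mount type) and that the security groups allow NFS traffic to the file system; the function name, the file system ID, and the /mnt/efs mount point are placeholders.

```shell
# Sketch: mount an EFS file system with encryption in transit.
# Assumes amazon-efs-utils is installed; fs_id and mount_point are placeholders.
mount_efs() {
    local fs_id="$1"                   # e.g. the ID shown in the EFS console
    local mount_point="${2:-/mnt/efs}" # where the file system should appear

    sudo mkdir -p "$mount_point"
    # "-o tls" asks the EFS mount helper to encrypt traffic in transit.
    sudo mount -t efs -o tls "${fs_id}:/" "$mount_point"
}

# After setting up the tools, copy their directories onto the EFS, e.g.:
#   cp -r ~/bin ~/.config /mnt/efs/
```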

I then created my nightly.sh as:

    #!/bin/bash

    echo "Running email backup"
    ./bin/gyb/gyb --email scott@summitroute.com --action backup
    if [ $? -ne 0 ]; then
        echo "ERROR: Backing up email failed"
    fi

    echo "Running drive backup"
    ./rclone --config .config/rclone/rclone.conf --drive-acknowledge-abuse sync remote: drive
    if [ $? -ne 0 ]; then
        echo "ERROR: Backing up the google drive failed"
    fi

    echo "***Done***"
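Both steps in nightly.sh follow the same pattern: run a command, then print a line containing ERROR if it failed so that the alarm fires. If you add more backup steps, one optional way to avoid repeating that check is a small wrapper. `run_step` is a hypothetical helper, not part of backup_runner, and its messages differ slightly from the ones in my script.

```shell
# run_step LABEL COMMAND [ARGS...]
# Runs the command and prints an "ERROR: ..." line if it exits non-zero,
# so a log alarm that watches for the word ERROR still triggers.
run_step() {
    local label="$1"
    shift
    echo "Running $label"
    if ! "$@"; then
        echo "ERROR: $label failed"
        return 1
    fi
}

# Usage, mirroring the two steps of nightly.sh:
#   run_step "email backup" ./bin/gyb/gyb --email scott@summitroute.com --action backup
#   run_step "drive backup" ./rclone --config .config/rclone/rclone.conf \
#       --drive-acknowledge-abuse sync remote: drive
```

Because a failing step only returns a non-zero status instead of exiting, later steps still run, which matches the behavior of the original script.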

Testing and recovering

To kick off the ECS task manually, select “Create new task” in the cluster screen of the web console. For Launch type, select “FARGATE”, and for Platform version be sure to select “1.4.0”, because otherwise ECS won’t know how to attach the EFS. Be sure to select the VPC that your EFS lives in. After the task has run, you should see the log messages in CloudWatch Logs, and from an attached EC2 instance you’ll be able to browse the files.
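The same manual run can likely be started from the AWS CLI instead of the console. The sketch below is an assumption-laden equivalent: the function name is made up, the cluster, task definition, subnet, and security group are values you would look up in your own deployment, and `assignPublicIp=ENABLED` assumes the task runs in a public subnet (drop it otherwise).

```shell
# Sketch: start the backup task once on Fargate platform version 1.4.0.
# All four arguments are placeholders for values from your own deployment.
run_backup_task() {
    local cluster="$1"
    local task_def="$2"
    local subnet="$3"
    local security_group="$4"

    aws ecs run-task \
        --cluster "$cluster" \
        --task-definition "$task_def" \
        --launch-type FARGATE \
        --platform-version 1.4.0 \
        --network-configuration \
            "awsvpcConfiguration={subnets=[$subnet],securityGroups=[$security_group],assignPublicIp=ENABLED}"
}
```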

In the event that a recovery is needed, if the most recent data is acceptable, you can just spin up an EC2 instance and attach it to the EFS, where you’ll be able to see all the files. Got-your-back and rclone do have restore commands, but you’ll likely have to modify the privileges for the credentials you have. As another option, and a way of testing that the data has backed up correctly, got-your-back writes out a lot of .eml files to paths based on the date. Tools like Thunderbird can understand these, or you can cat them. Rclone copies your Google Drive to the EFS, making it easy to check the files.
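A quick sanity check from the attached EC2 instance is to count the .eml files in the email backup directory and compare the number against what you expect from your mailbox. This is only a sketch; `count_eml` is a made-up helper and the directory you pass in depends on where got-your-back wrote its backup on your EFS.

```shell
# Count the .eml files anywhere under the given directory.
count_eml() {
    # tr strips the padding some wc implementations add around the number.
    find "$1" -type f -name '*.eml' | wc -l | tr -d ' '
}

# Usage (the path is a placeholder for your email backup directory):
#   count_eml /mnt/efs/<email backup directory>
```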

With this setup, I feel more comfortable that I can revert to a previous state for my email or drive files.