Ransomware has become a primary concern for infosec. This post discusses options for ensuring the durability of data stored on S3, through protections in place and backup strategies. The AWS Backup service unfortunately does not back up S3 buckets, and many discussions of backups and data durability on AWS do not describe the implementation in sufficient detail, which leaves room for a number of potential dangers. This post will show you the two best options (S3 object locks and replication policies), explain how to use them, and point out what to watch out for.

Ransom attacks in AWS?

Wherever critical data lives, ransomware attackers will go. Although I’m not currently aware of ransomware groups that specifically target AWS environments or S3, I have heard of ransom-based attacks in AWS, along with scorched-earth attacks where attackers just deleted all of the resources, including data in the S3 buckets, in accounts they compromised or in S3 buckets they were able to access. One of the most infamous breaches of an AWS account, the Code Spaces breach back in 2014, involved a decision not to pay a ransom, the deletion of everything in an AWS account, and the subsequent shutdown of the business within 12 hours of the breach as all data (and backups) had been destroyed.

On March 26, AWS added an inline policy to one of my IAM users (for flaws.cloud) that they believed was compromised by adding a deny on s3:*, and on April 21, AWS released a new IAM managed policy named AWSCompromisedKeyQuarantineV2 which included a deny on s3:DeleteObject and similar actions that would be used by an attacker to delete data. Both of those moves by AWS hint at some sort of attack they saw.

Spencer Gietzen, who sadly passed away earlier this year, published research in 2019 in his posts S3 Ransomware Part 1: Attack Vector and Part 2: Prevention and Defense, and his presentation Ransom in the Cloud. Since that time, there have been some improved mitigations that I’ll discuss.

The threat and constraints I’m considering

The threat I consider is having an attacker obtain admin access to an account that contains critical data in an S3 bucket. At one end of the spectrum, this could simply be a legit admin accidentally running an aggressive script that deletes the wrong S3 bucket, and at the other end this could be a sophisticated attacker that knows their way around an AWS environment and the potential mistakes that could be made in attempting to ensure data durability.

Even if you have many controls in place, ransomware groups are making enough money (supposedly over $100M/yr for REvil) that there is a possibility of them buying effective 0-days to target your AWS admins or IT staff (who likely have the ability to do things like reset passwords for your AWS admins or grant themselves privileged access to your AWS accounts). So we want to ensure our controls are as good as they can be.

S3 has 99.999999999% data durability of objects over a given year, and stores copies of your data redundantly across 3 AZs, meaning that even if two entire AZs in a region get destroyed at the same time, your data will persist. So we’re not concerned with a hard-drive failure at AWS resulting in our data loss. Of interest on this topic though, be aware that AWS has stated they store over 100 trillion objects in S3, so the way the math works out, this means they lose 1,000 S3 objects per year. At scale, rare events aren’t rare. Unfortunately I’ve never heard of what this looks like when it happens (does AWS notify you? Return an error when you try reading it? Return a corrupted object? Not show it listed anymore?).
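The arithmetic behind that figure can be checked with a few lines. This is only a back-of-the-envelope sketch: it treats the stated durability as an independent per-object annual survival probability, which is my simplifying assumption, and the function name is made up for illustration.

```python
# Back-of-the-envelope estimate of objects lost per year at S3's stated durability.
from fractions import Fraction

DURABILITY = Fraction(99_999_999_999, 100_000_000_000)  # 99.999999999% (eleven nines)
OBJECTS_STORED = 100 * 10**12                           # "over 100 trillion objects"


def expected_annual_loss(objects: int, durability: Fraction) -> Fraction:
    """Expected number of objects lost in a year, treating losses as independent."""
    return objects * (1 - durability)


print(expected_annual_loss(OBJECTS_STORED, DURABILITY))  # 1000
```

Exact fractions are used instead of floats so the eleven nines do not get lost to rounding.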

For our solution, we want to maintain the existing usability of the S3 bucket as much as possible, meaning we still want the ability to modify and delete objects in this bucket, but we may wish to recover to an earlier time. For this reason, MFA delete is not an acceptable solution, because to delete an object you must use the AWS root account with an MFA device and provide the MFA code for every object deletion.

We want an RPO (Recovery Point Objective) on the order of 15 minutes (meaning we are willing to lose up to the most recent 15 minutes worth of data in an incident) and RTO (Recovery Time Objective) of a day (meaning we accept that it may take 24 hours to recover from an incident). Understand that if you want to improve those numbers, you may need to dramatically re-architect for a better solution.

We want to minimize costs. This mostly means we want to avoid having too many copies of our data and may want to use less expensive storage classes for any backups. Copying all our data to Snowballs every night and shipping them to different physical locations is thus not acceptable, as this will cost at least $9K/mo to cover the $300/Snowball cost, and you’ll additionally pay data transfer of $0.03/GB (which is more than normal S3 charges) and shipping fees. This also will not meet our RPO and RTO goals.

We assume that the need to recover is apparent when an incident happens. If an attacker has corrupted only a single file, and then waits a year to demand a ransom to tell you which file changed, it may be very difficult to detect and is out of scope of this article.

What about cross-region backups?

One concept you’ll often hear about for data durability is to back up your data to another region, but there are some issues with this:

- Copying your data to another region incurs data transfer costs. It costs nearly as much for S3 to replicate your data to another region ($0.02/GB) as it does to store a GB in S3 for a month ($0.023), with some regional differences. Therefore if you back up your data to S3 Standard in another region, you’ve nearly tripled the cost of that data in the month it is copied. Attempting to use less expensive storage classes to save money will not work how you might expect, as will be discussed later.

- The possible situations that would result in the permanent loss of 3 AZs simultaneously may mean a physical disaster of significant enough impact that your business continuity may be the least of your worries.

- If you’re concerned about risks that could impact an entire AWS region, your concerns are likely better addressed by backing up your data to another cloud provider in another region as well. Going multi-cloud for your backup strategy would ultimately address more risks more effectively than going multi-region within AWS.

You may need to back up to another region for compliance reasons though, and the ideas I propose will still be useful for that scenario.
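The cost point in the first bullet above can be checked with quick arithmetic. This sketch uses only the two prices quoted there; the function name is hypothetical, and it assumes the replication charge is paid once per GB copied while storage is paid every month.

```python
# Rough cost ratio for keeping a second copy of 1 GB in another region.
STORAGE_PER_GB_MONTH = 0.023  # S3 Standard storage price quoted above
REPLICATION_PER_GB = 0.02     # cross-region transfer price quoted above


def cost_ratio(include_transfer: bool) -> float:
    """Cost of primary + backup copy relative to the primary copy alone."""
    primary = STORAGE_PER_GB_MONTH
    backup = STORAGE_PER_GB_MONTH + (REPLICATION_PER_GB if include_transfer else 0.0)
    return (primary + backup) / primary


print(round(cost_ratio(include_transfer=True), 2))   # month the data is copied
print(round(cost_ratio(include_transfer=False), 2))  # later months, storage only
```

Data that changes often keeps paying the transfer charge, so the higher ratio is the more realistic one for frequently rewritten objects.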

Protecting the data in place

Least privilege

You should always strive to ensure least privileged access to your AWS accounts and avoid situations where an attacker would have access to your critical data. Putting your critical data in a dedicated AWS account is helpful for accomplishing this, especially if privileged users are regularly accessing your production account.

Restricting access to your S3 buckets can be further enhanced via VPC endpoints to only allow access from specific VPCs. This can help ensure that even if an admin user makes a terrible mistake or is compromised, they will not be able to impact the critical S3 buckets unless they do so from the allowed VPC.
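As a minimal sketch of what such a restriction can look like, the following builds a bucket policy statement that denies all S3 actions unless the request arrives through an allowed VPC endpoint, using the `aws:SourceVpce` condition key. The bucket name and endpoint ID are placeholders, and the helper name is made up for illustration. Be careful with a blanket deny like this: it also blocks console access and can lock you out of the bucket.

```python
import json


def vpce_only_statement(bucket: str, vpce_ids: list[str]) -> dict:
    """Deny every S3 action on the bucket unless it comes via an allowed VPC endpoint."""
    return {
        "Sid": "DenyAccessOutsideAllowedVpcEndpoints",
        "Effect": "Deny",
        "Principal": "*",
        "Action": "s3:*",
        "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
        # StringNotEquals matches when the request did not come through
        # any of the listed endpoints.
        "Condition": {"StringNotEquals": {"aws:SourceVpce": vpce_ids}},
    }


# Placeholder values for illustration only.
statement = vpce_only_statement("bucket", ["vpce-1a2b3c4d"])
print(json.dumps(statement, indent=2))
```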

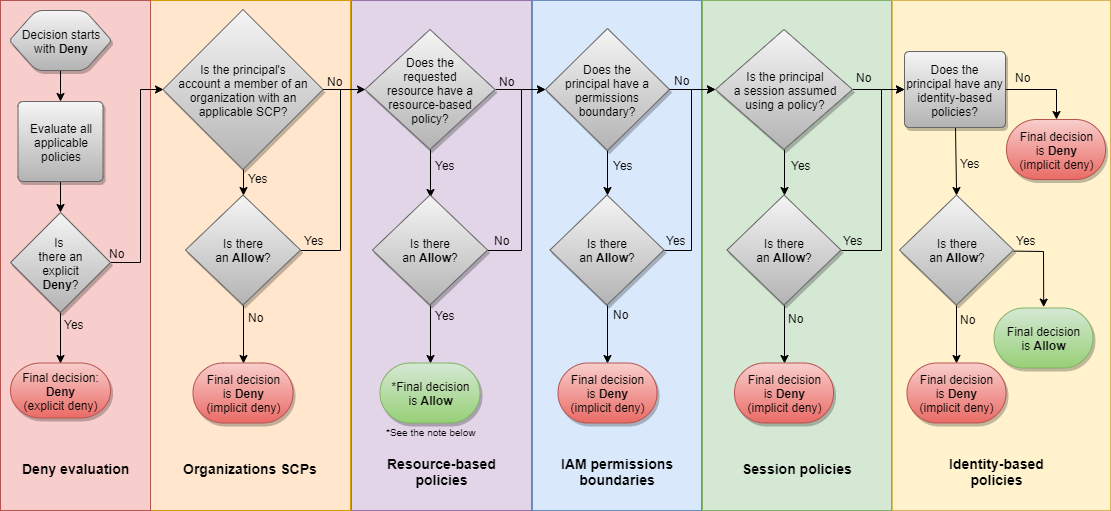

When working with S3 buckets, you need to be very aware of how resource policies can grant access to principals even if that principal does not have associated IAM policies that have granted them access. The following graph warrants its own blog post, but the critical take-away is there are two green end states, meaning two ways you can grant someone access to a resource.

To explain this concept, a common issue I’ve seen is that an S3 bucket will have a statement similar to the following in its resource policy:

    {
      "Sid": "DO_NOT_DO_THIS",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:*",
      "Resource": [
        "arn:aws:s3:::bucket",
        "arn:aws:s3:::bucket/*"
      ],
      "Condition": {
        "StringEquals": {
          "aws:PrincipalOrgID": "o-abcd1234"
        }
      }
    }

The person who set this policy wanted to make sure that others in the organization could access this bucket, but did not realize that the statement itself grants access, including to principals within the same account as the bucket whose IAM policies grant them nothing on it. When I would do assessments, I would be given SecurityAudit privileges, which should not allow me to read or write sensitive data, but because of this resource policy, I and other vendors that had least privilege roles in the account would actually be given full access to that S3 bucket!
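A quick way to spot this pattern when reviewing bucket policies is to flag any Allow statement that pairs a wildcard principal with a wildcard action, whatever conditions are attached. The check below is a rough sketch with hypothetical helper names, not a full policy analyzer: it ignores NotPrincipal, NotAction, and partial wildcards such as `s3:Get*`.

```python
def as_list(value):
    """Policy fields may be a single value or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def is_overly_broad(statement: dict) -> bool:
    """True for Allow statements that give any principal a wildcard S3 action."""
    if statement.get("Effect") != "Allow":
        return False
    principal = statement.get("Principal")
    any_principal = principal == "*" or (
        isinstance(principal, dict) and "*" in as_list(principal.get("AWS", []))
    )
    wildcard_action = any(
        action in ("*", "s3:*") for action in as_list(statement.get("Action", []))
    )
    # A Condition (such as aws:PrincipalOrgID) narrows who matches, but the
    # statement still grants access by itself, so it is flagged for review.
    return any_principal and wildcard_action


risky = {
    "Sid": "DO_NOT_DO_THIS",
    "Effect": "Allow",
    "Principal": "*",
    "Action": "s3:*",
    "Resource": ["arn:aws:s3:::bucket", "arn:aws:s3:::bucket/*"],
    "Condition": {"StringEquals": {"aws:PrincipalOrgID": "o-abcd1234"}},
}
print(is_overly_broad(risky))  # True
```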

Random naming

As part of least privilege you should avoid giving the ability to list the S3 buckets in the account (s3:ListAllMyBuckets) or the objects within those buckets (s3:ListBucket). But let’s say you give only s3:PutObject for something that produces a critical set of logs each day that are named 2021-01-01.log, 2021-01-02.log, etc. If someone compromises that service and knows the naming pattern, they could overwrite these log files. There is not a way on AWS to give permissions to write an object, but not overwrite existing objects (at least not in the way most want, but object versioning discussed below helps with this). It would be better to name the files with something random, such as 2021-01-01-fdc1127f-7a1d-4721-a081-a66a536649ae.log. You’ll still be able to identify when this log was created, but an attacker would not be able to blindly overwrite it.
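Generating such names takes only a couple of lines. This is a small sketch with a hypothetical function name; it appends a random UUID to the date, matching the example key format above.

```python
import uuid
from datetime import date


def random_log_key(day: date) -> str:
    """Build a log object key that keeps the date but cannot be guessed in advance."""
    return f"{day.isoformat()}-{uuid.uuid4()}.log"


print(random_log_key(date(2021, 1, 1)))
```

The writer only needs s3:PutObject, and because `uuid.uuid4()` is random, an attacker who knows the date pattern still cannot predict the full key of an existing log.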

The foundations of our durability options

S3 object versioning

Many of the strategies to be discussed for data durability require S3 object versioning to be enabled for the bucket (this includes S3 object locks and replication policies). With object versioning, anytime an object is modified, it results in a new version, and when the object is deleted, it only results in the object being given a delete marker. This allows an object to be recovered if it has been overwritten or marked for deletion. However, it is still possible for someone with sufficient privileges to permanently delete all objects and their versions, so this alone is not sufficient.

When using object versioning, deleting old versions permanently is done with the call s3:DeleteObjectVersion, as opposed to the usual s3:DeleteObject, which means that you can apply least privilege restrictions to deny someone from deleting the old versions. This can help mitigate some issues, but you should still do more to ensure data durability.
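As an illustration of that restriction, the sketch below builds an identity policy with a single statement denying s3:DeleteObjectVersion on a bucket's objects. The bucket name is a placeholder and the helper name is hypothetical. You would likely want to scope or exempt specific principals rather than apply it to everyone.

```python
import json


def deny_version_delete_statement(bucket: str) -> dict:
    """Statement that blocks permanent deletion of object versions in one bucket."""
    return {
        "Sid": "DenyPermanentVersionDeletes",
        "Effect": "Deny",
        "Action": "s3:DeleteObjectVersion",
        "Resource": f"arn:aws:s3:::{bucket}/*",
    }


policy = {
    "Version": "2012-10-17",
    "Statement": [deny_version_delete_statement("bucket")],  # placeholder bucket name
}
print(json.dumps(policy, indent=2))
```

With this attached, a principal can still call s3:DeleteObject, which only adds a delete marker, but cannot remove the underlying versions.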

Lifecycle policies

Old versions of objects will stick around forever, and each version is an entire object, not a diff of the previous version. So if you have a 100MB file that you change frequently, you’ll have many copies of this entire file. AWS acknowledges in the documentation “you might have one or more objects in the bucket for which there are millions of versions”. In order to reduce the number of old versions, you use lifecycle policies.

If you are constantly updating the same objects multiple times per day, you may need a different solution to avoid unwanted costs.
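A lifecycle rule for cleaning out old versions might look like the following sketch. The dictionary follows the shape that boto3's `put_bucket_lifecycle_configuration` accepts for its `LifecycleConfiguration` parameter. The rule ID, the helper name, and the 30-day window are assumptions chosen for illustration.

```python
def noncurrent_expiry_rule(days: int, rule_id: str = "expire-noncurrent-versions") -> dict:
    """Lifecycle rule that permanently removes versions N days after they stop being current."""
    if days < 1:
        raise ValueError("days must be a positive integer")
    return {
        "ID": rule_id,
        "Status": "Enabled",
        "Filter": {"Prefix": ""},  # empty prefix applies the rule to the whole bucket
        "NoncurrentVersionExpiration": {"NoncurrentDays": days},
    }


# Assumed retention window of 30 days for non-current versions.
lifecycle_configuration = {"Rules": [noncurrent_expiry_rule(30)]}
print(lifecycle_configuration)
```

The window you choose here is effectively how far back you can recover an overwritten or deleted object, so it should not be shorter than the time you need to notice an incident.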

Storage classes

With lifecycle policies, you have the ability to transition objects to less expensive storage classes. Be aware that there are many constraints, specifically around the size of the object and how long you have to keep it before transitioning or deleting it. Objects in the S3 Standard storage class must be kept there for at least 30 days before they can be transitioned. Further, once an object is in the S3 Intelligent-Tiering, S3 Standard-IA, or S3 One Zone-IA storage class, it must be kept there for 30 days before deletion. Objects in Glacier must be kept for 90 days before deleting, and objects in Glacier Deep Archive must be kept for 180 days. So if you had plans of immediately transitioning all non-current object versions to Glacier Deep Archive to save money, and then deleting them after 30 days, you will not be able to.

S3 object locks

S3 object locks are a powerful feature that allows you to ensure that an S3 object cannot be deleted until a set date, no matter what. This is great for our data durability, but this can also be potentially dangerous because an attacker could sync in a lot of large files from a public S3 bucket, and then lock these files for up to 100 years, and by design, not even AWS can undo this. The only way to stop paying for locked files that you don’t care about is to have AWS delete the account, which first means transitioning all your resources (except these locked files) to another account, which is often a big lift. To help mitigate this, the AWS documentation has a statement that you can apply to your bucket policy, or as an SCP, that restricts people from locking objects for too long.

    {
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:PutObjectRetention",
      "Resource": "arn:aws:s3:::bucket/*",
      "Condition": {
        "NumericGreaterThan": {
          "s3:object-lock-remaining-retention-days": "30"
        }
      }
    }

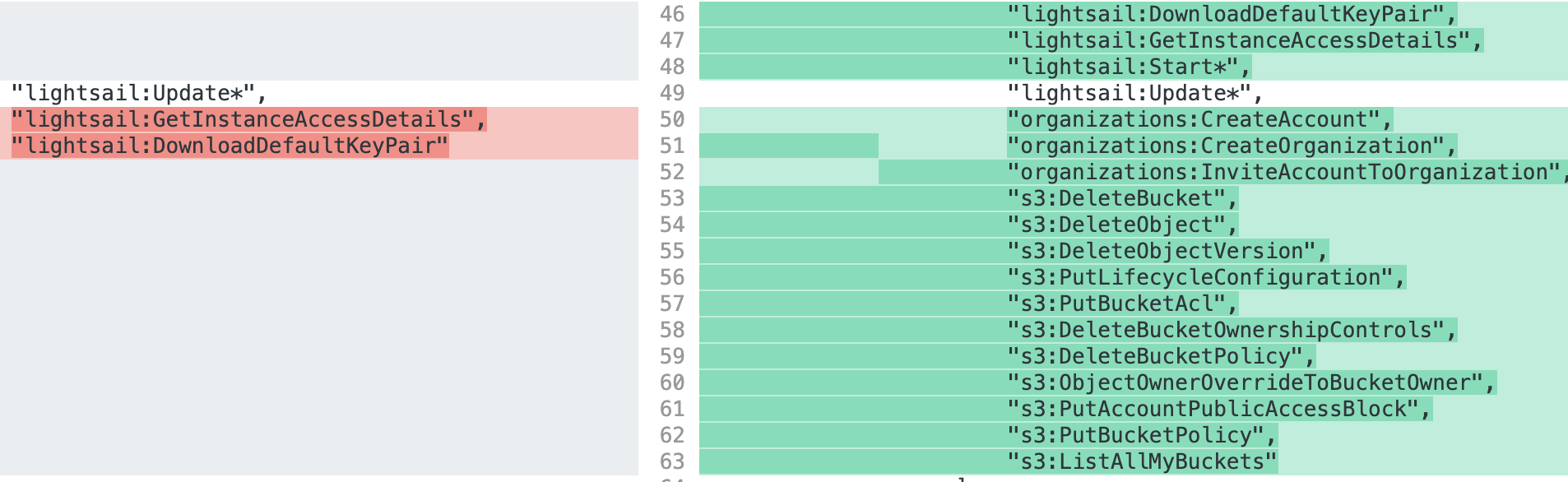

Ian McKay put together a great list of privileges you should disable at the SCP level to limit some Denial of Wallet attacks and mistakes, such as someone using object locks (s3:PutObjectRetention) or setting a default object lock policy (s3:PutBucketObjectLockConfiguration). Denying the privilege s3:PutBucketObjectLockConfiguration will prevent someone from creating an S3 bucket that has S3 object lock enabled. However, if such a bucket already exists and the attacker has sufficient privileges, then even with the above SCPs in place the attacker could change the bucket policy to allow access from their own account. Because SCPs do not apply to principals in external accounts, the attacker could then fill the bucket with objects that are locked for the next 100 years.

Despite the above concern, S3 object lock is the most powerful feature on AWS for ensuring your data cannot be deleted. You can also apply a default object lock retention period to an S3 bucket, which means how you interact with the S3 bucket will not change. Objects can still be marked for deletion as happens normally with S3 object versioning, but the object cannot be permanently deleted until the lock expires.

The problem I have with S3 object lock from a data durability perspective is that there isn’t a concept of a rolling lock. I want to say “Allow objects to be marked for deletion, but don’t allow them to be permanently deleted for 30 days after that”. To explain this, imagine you have an object lock configuration that locks your objects for 30 days. You then put some critical objects in it. If your account is breached on day 1, the attacker will not be able to permanently delete these objects. If the attacker breaches the account on day 31 with sufficient privileges, they can wipe out all the critical data that you had put there on day 1.

One possible solution is to run a nightly process to iterate through all your objects and put an object lock on the latest object versions. This can be done with the S3 Batch service as documented here.
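To make the nightly re-lock idea concrete, here is a sketch of the request each latest object version would need. It only builds the arguments; they match the keyword parameters of boto3's `put_object_retention`, which you could call directly for small buckets instead of going through S3 Batch. The function name, the 30-day window, and the choice of COMPLIANCE mode are my assumptions.

```python
from datetime import datetime, timedelta, timezone


def relock_request(bucket: str, key: str, version_id: str, now: datetime,
                   lock_days: int = 30, mode: str = "COMPLIANCE") -> dict:
    """Arguments that push an object version's lock out to `lock_days` from now."""
    return {
        "Bucket": bucket,
        "Key": key,
        "VersionId": version_id,
        "Retention": {
            "Mode": mode,  # assumed mode; pick the one your bucket uses
            "RetainUntilDate": now + timedelta(days=lock_days),
        },
    }


# Hypothetical object, for illustration only.
request = relock_request("bucket", "critical/data.db", "v1",
                         now=datetime(2021, 1, 1, tzinfo=timezone.utc))
print(request["Retention"]["RetainUntilDate"])  # 2021-01-31 00:00:00+00:00
```

Run nightly against the latest version of each object, this keeps every current object at least 29 days away from being deletable, which approximates the rolling lock described above. Versions that have been superseded simply stop being extended and age out.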

Replication policies

The classic approach to data durability is to create backups and ensure the backups are separate from the primary data. In AWS, in order to have a strong security boundary, this must be done in a separate AWS account. Ideally, this would be completely separate from your other accounts (separate AWS organization, not accessed through your normal SSO process, accessed only with special unmanaged laptops, etc.), but there are good arguments for not going to this extreme, especially because it often means attempting to duplicate many of your security controls. Another important concern is that you will likely have multiple sets of critical data that have potentially been strongly isolated, all being backed up to the same AWS account. In doing so, the backup account becomes an attractive target full of sensitive data. Watch for weaknesses such as the following in how the backup account is set up:

- The same people have access to both the primary data and backup data.

- The IT or help team has the ability to reset credentials or deploy software updates to the people with access to the backup data, or to add themselves to the groups that have access.

- Any cross-account AWS roles that grant write or delete access into the backup account from other accounts.

- Monitoring or security controls on the backup account are not aligned with how other accounts are secured.

The easiest way to set up S3 backups is through replication policies, which can copy objects to an S3 bucket in a different account in the same region or a different region. To do this, you need to:

- Create an IAM role that will replicate this data.

- Set the bucket resource policy in the target bucket in the backup account to allow the source account to send data to it, as documented here.

- Set a replication policy on the source bucket in the primary account.

  - Ensure delete markers are replicated.

  - Include changing the replica owner in the policy.

  - Set a less expensive storage class for the replicated data.

  - Optional: Ensure Replication Time Control is enabled so that 99.99% of objects are replicated within 15 minutes.

- Set lifecycle policies on the source and destination buckets to clean out the non-latest versions of objects.

- Optional: Monitor notification events for replication failures.
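The replication settings in the steps above can be sketched as a single configuration. The structure follows what boto3's `put_bucket_replication` accepts as its `ReplicationConfiguration` parameter. The rule ID, function name, ARNs, account ID, and the choice of STANDARD_IA are placeholders and assumptions for illustration.

```python
def replication_configuration(role_arn: str, dest_bucket_arn: str,
                              dest_account_id: str,
                              storage_class: str = "STANDARD_IA") -> dict:
    """Replication rule covering the whole bucket, sent to a bucket in another account."""
    return {
        "Role": role_arn,  # IAM role S3 assumes to replicate the data
        "Rules": [{
            "ID": "backup-to-separate-account",
            "Priority": 1,
            "Status": "Enabled",
            "Filter": {"Prefix": ""},  # empty prefix matches every object
            "DeleteMarkerReplication": {"Status": "Enabled"},
            "Destination": {
                "Bucket": dest_bucket_arn,
                "Account": dest_account_id,
                # Hand ownership of the replicas to the backup account.
                "AccessControlTranslation": {"Owner": "Destination"},
                "StorageClass": storage_class,
                # Optional: Replication Time Control, which also needs metrics on.
                "ReplicationTime": {"Status": "Enabled", "Time": {"Minutes": 15}},
                "Metrics": {"Status": "Enabled", "EventThreshold": {"Minutes": 15}},
            },
        }],
    }


# Placeholder identifiers for illustration only.
config = replication_configuration(
    role_arn="arn:aws:iam::111111111111:role/s3-replication",
    dest_bucket_arn="arn:aws:s3:::backup-bucket",
    dest_account_id="222222222222",
)
print(config["Rules"][0]["Destination"]["StorageClass"])  # STANDARD_IA
```

Both buckets need versioning enabled before S3 will accept a replication configuration.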

Recovery

In the event that an attacker has compromised and destroyed your primary data, your backup bucket will have delete markers on it that you’ll need to remove. You’ll then need to copy your data back from your backup bucket to the primary bucket. As you do this, take care not to give an attacker who may still be around write access to your backups. AWS is not clear on what the fastest method is for this, but I think AWS DataSync may be the best solution. You should work with your TAM or AWS support on this recovery process. A problem with many ransomware cases I’ve heard of is that recovering from backups takes longer than just paying the ransom and recovering that way, but I don’t think this would be the case for data stored in S3.
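Finding the delete markers to remove can be sketched as a filter over the output of a version listing. The input below mimics one page of a boto3 `list_object_versions` response, trimmed to the fields used. The function name and sample keys are hypothetical. The returned list has the shape that `delete_objects` expects under `Delete["Objects"]`.

```python
def delete_markers_to_remove(page: dict) -> list[dict]:
    """Pick the delete markers that are currently hiding an object.

    Removing a delete marker by its version ID makes the previous
    version the current one again.
    """
    return [
        {"Key": marker["Key"], "VersionId": marker["VersionId"]}
        for marker in page.get("DeleteMarkers", [])
        if marker.get("IsLatest")
    ]


# Hypothetical page of results, for illustration only.
example_page = {
    "DeleteMarkers": [
        {"Key": "reports/a.csv", "VersionId": "v3", "IsLatest": True},
        {"Key": "reports/b.csv", "VersionId": "v7", "IsLatest": False},
    ]
}
print(delete_markers_to_remove(example_page))
# [{'Key': 'reports/a.csv', 'VersionId': 'v3'}]
```

This only covers deletions. Objects the attacker overwrote would need an earlier version selected by timestamp instead, which this sketch does not attempt.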

Further considerations

With object locking and replication policies, when these are applied to an existing S3 bucket, the objects that already exist in the S3 bucket will not be impacted. So if you have an existing S3 bucket with critical data in it, you must take actions to replicate or lock the existing files. AWS support can assist with replicating files and enabling object locking on existing S3 buckets.

Detections

You should set up monitoring to detect and alert on the following:

- Detect s3:PutBucketLifecycleConfiguration as this can be used to delete objects. Mark it as high severity if the expiration is less than some amount, say 30 days, or when it is not on the non-current object version.

- Detect s3:PutBucketPolicy calls on any critical S3 buckets.

- For data events:

  - Detect calls to s3:DeleteObjectVersion.

  - Detect access denied calls to s3:DeleteObject.

  - Detect calls to

- Detect the creation of any S3 bucket with object locking enabled and auto-remediate.

CloudTrail data events can get expensive (both to record and to monitor), but you can use advanced event selectors to look for specific object-level APIs and filter on specific S3 buckets.
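As a rough sketch of such a selector, the following builds one entry for the `AdvancedEventSelectors` parameter of CloudTrail's `put_event_selectors`, limited to delete calls on a single bucket. The bucket name and function name are placeholders. I am assuming here that permanent version deletes are recorded under the `DeleteObject` event name with a version ID in the request parameters, since CloudTrail logs API operation names rather than IAM action names, so verify this against your own trail.

```python
import json


def s3_delete_event_selector(bucket: str) -> dict:
    """Advanced event selector recording only object delete calls for one bucket."""
    return {
        "Name": f"S3 delete data events for {bucket}",
        "FieldSelectors": [
            {"Field": "eventCategory", "Equals": ["Data"]},
            {"Field": "resources.type", "Equals": ["AWS::S3::Object"]},
            # Assumption: version deletes also appear under these event names.
            {"Field": "eventName", "Equals": ["DeleteObject", "DeleteObjects"]},
            {"Field": "resources.ARN", "StartsWith": [f"arn:aws:s3:::{bucket}/"]},
        ],
    }


# Placeholder bucket name for illustration only.
print(json.dumps(s3_delete_event_selector("bucket"), indent=2))
```

Narrowing on both event name and bucket ARN keeps the data event volume, and therefore the cost, close to the handful of calls you actually want to alert on.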

Conclusion

Ensuring S3 data cannot be deleted by an attacker is not entirely trivial, but hopefully this guide has explained the better options (S3 object locking and replication policies) and pointed out some common problems to watch out for.