A defender’s job is not only to stop bad things from happening, but also to detect issues as they occur so mitigating actions can be taken before they become bad (or worse). Alerts are how you do this.

This post gives advice on using alerts for security purposes. Much of this also relates to alerts for DevOps.

I use the word “alert” to mean either the notification message that results from something searching logs, or the rule that defines the logic used to search logs and causes this notification. For example, I find it valid to say “I received an alert from the alert I wrote” which would mean “I received a notification from the rule I wrote.” I’ve tried to clarify their uses.

Collect logs. Create alerts.

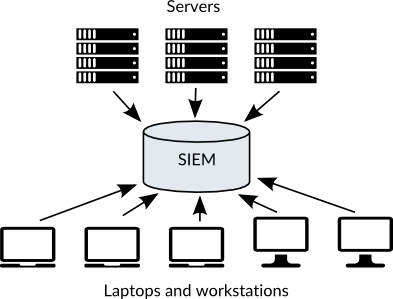

Send your logs to a central location. This is important both to protect the integrity of the logs (so an attacker on a host can’t wipe them) and to make them easier to work with than having to ssh into each host and grep and less through them. An ELK stack with ElastAlert, as I described in my post Iterative Defense Architecture, will do fine. This collection and alerting system is your SIEM.

Secure your logs, their transfer to your SIEM, their access, and your alert messages at the same level of security as the contents of the data you’re capturing. Avoid logging secrets like credit card numbers or passwords. OneLogin had an incident in August 2016 where it was accidentally logging passwords before hashing them to store in their database. You may need to have multiple SIEMs to ensure segmentation of privileged access.

Secure your alert rules. If an attacker gets access to your git repo that stores what all the rules are for what you alert on, they can avoid being detected.

Make sure your logs include the time, host, and what created the log message. Make sure your timestamp format is standardized and your hosts’ clocks are synchronized.
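As a rough illustration of what that can look like, here is a minimal sketch of a Python log formatter that stamps every message with a UTC ISO 8601 time, the host, and the source. The field names (`time`, `host`, `source`) and the `build_logger` helper are my own choices for the example, not a standard, and the sketch does nothing about clock sync, which you still need to handle with something like NTP.

```python
import json
import logging
import socket
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object with time, host, and source."""

    def format(self, record: logging.LogRecord) -> str:
        event = {
            # ISO 8601 in UTC so every host reports the same format and zone.
            "time": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "host": socket.gethostname(),
            # What created the message: logger name plus process ID.
            "source": f"{record.name}[{record.process}]",
            "level": record.levelname,
            "message": record.getMessage(),
        }
        return json.dumps(event)


def build_logger(name: str) -> logging.Logger:
    """Hypothetical helper: a logger that writes JSON lines to stderr."""
    logger = logging.getLogger(name)
    if not logger.handlers:  # avoid attaching duplicate handlers
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger
```

Calling `build_logger("authsvc").info("login ok for %s", "alice")` would then emit a single JSON line that your log shipper can forward as-is.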

Improve your signal to noise ratio

Logs are a flood of noise. Your goal is to turn that noise into information, and alerts are the tool you use to accomplish this. Improving the signal to noise ratio on alerts is a constant battle. The goal is to receive an actionable alert notification for every problem without missing anything and with no false positives. You will never perfectly reach this goal.

You will accidentally flood yourself with alert notifications (many times) as you create and tune your rules. Test each alert rule before turning it on by checking how many times it would have fired on past data. Sometimes things will break or change in your environment and fire hundreds (thousands? millions?) of alert notifications. Be ready to turn off your alerts when a flood happens, bulk dismiss the relevant alert messages, and turn your alerting back on, ideally with it catching up on what happened while it was off without flooding you again.
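Checking a rule against past data can be as simple as replaying history through it and counting. This is only a sketch: the event fields (`time`, `user`, `outcome`) and the `failed_root_login` rule are made up for the example, and in practice you would run the equivalent query in your SIEM.

```python
from collections import Counter
from datetime import datetime
from typing import Callable, Iterable

Event = dict
Rule = Callable[[Event], bool]


def backtest(rule: Rule, history: Iterable[Event]) -> Counter:
    """Count how many times `rule` would have fired per calendar day."""
    fires = Counter()
    for event in history:
        if rule(event):
            day = datetime.fromisoformat(event["time"]).date().isoformat()
            fires[day] += 1
    return fires


def failed_root_login(event: Event) -> bool:
    # Hypothetical rule: a failed login for the root account.
    return event.get("user") == "root" and event.get("outcome") == "failure"


# A tiny, invented slice of past data.
history = [
    {"time": "2017-03-01T09:00:00+00:00", "user": "root", "outcome": "failure"},
    {"time": "2017-03-01T09:05:00+00:00", "user": "alice", "outcome": "success"},
    {"time": "2017-03-02T02:10:00+00:00", "user": "root", "outcome": "failure"},
    {"time": "2017-03-02T02:11:00+00:00", "user": "root", "outcome": "failure"},
]

per_day = backtest(failed_root_login, history)
```

If the per-day counts come back in the thousands, tune the rule before it ever gets the chance to page anyone.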

If you’re not getting alerts for problems that arise, then you aren’t alerting on enough things and your alert rules are too tight. If you’re being flooded regularly with alert notifications and missing important things in the flood, then your alert rules are too loose. You could of course also get too many alert notifications because too many things are going wrong. In this case, figure out ways to stop the bleeding and prioritize. You may also need to aggregate your alert notifications (more on this later).

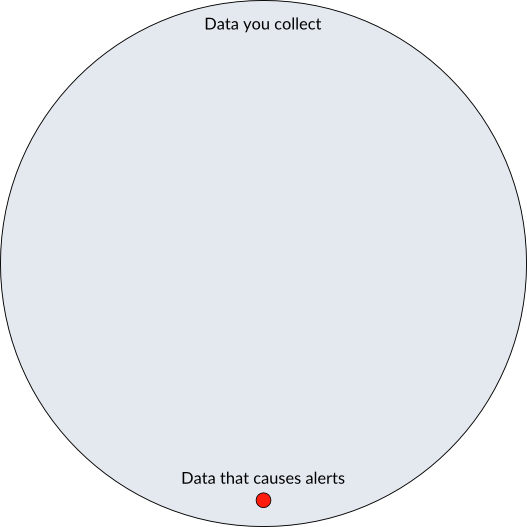

Alert notifications should be on a fraction of the data collected. You should not collect only data that you know is bad. By this, I mean avoid putting any alert logic on your hosts. The problems with this are:

- It lets the attacker know what you look for if he compromises that host.

- You won’t be able to search through historical data for malicious things you didn’t know about until recently.

- You will lack context when you need to investigate.

Make your alerts relevant

At their most basic, alert rules can usually be thought of as simply grep commands. Make your alerting more intelligent than grep.

Have your alert rules call code to get more information. For example, AWS Cloudtrail logs will tell you a change has been made to Security Group sg-123456. If you alert on that in some way, you will want to report what the human readable name is for that Security Group, which will require you to call an API.
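Here is a sketch of that kind of enrichment. The event shape (`group_id`, `actor`) is a simplification I made up and is not the real CloudTrail schema, and `fake_lookup` is a stand-in for the API call. The lookup is passed in as a function so you can swap in a real client.

```python
from typing import Callable, Dict


def enrich_security_group_alert(
    event: Dict[str, str],
    lookup_name: Callable[[str], str],
) -> str:
    """Build an alert message that includes the group's readable name."""
    group_id = event["group_id"]
    try:
        name = lookup_name(group_id)
    except Exception:
        # A failed lookup should not suppress the alert itself.
        name = "unknown"
    return f"Security Group {group_id} ({name}) was changed by {event['actor']}"


def fake_lookup(group_id: str) -> str:
    # Stand-in for an API call; the name below is invented for the example.
    names = {"sg-123456": "prod-web-ingress"}
    return names[group_id]


message = enrich_security_group_alert(
    {"group_id": "sg-123456", "actor": "alice"}, fake_lookup
)
```

Note that the alert still goes out if the lookup fails. Losing the friendly name is annoying; losing the alert is worse.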

Aggregate multiple alert notifications into a single alert or only alert on the first event. For example, if you alert on every beacon to a malicious site, and you have an infection that beacons home every 5 minutes, then you will get nearly 300 alerts per day for that one system, when all you should have is a single alert saying that system is infected.
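A minimal sketch of alerting only on the first event per host is below. The event fields and the host and domain names are invented for the example, and a real implementation would also need to forget hosts after they are cleaned up.

```python
from typing import Dict, Iterable, List


def first_beacon_alerts(events: Iterable[Dict[str, str]]) -> List[str]:
    """Emit one alert per infected host, no matter how often it beacons."""
    seen_hosts = set()
    alerts = []
    for event in events:
        host = event["host"]
        if host in seen_hosts:
            continue  # already alerted on this host
        seen_hosts.add(host)
        alerts.append(f"{host} is beaconing to {event['domain']}; treat it as infected")
    return alerts


# One day of beacons every 5 minutes from a single host: 24 * 60 / 5 = 288 events.
beacons = [{"host": "laptop-17", "domain": "bad.example"} for _ in range(24 * 60 // 5)]
alerts = first_beacon_alerts(beacons)
```

Those 288 beacon events collapse into a single alert for `laptop-17`.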

Make your alerts actionable

There is little point in alerting on something you can’t take action on. We had security monitoring running on our guest wifi network once, and it detected a callback to a C&C domain. Not only did we not know what device was infected, but even if we knew, we wouldn’t be able to do much to remediate it. All we could do would be tell the person and/or block it on our network, neither of which ensures the issue will be resolved.

Make sure you collect enough information to be able to investigate. Make your alert messages contain the important information. It’s annoying to have to dig through the logs just to understand what the alert is about, for example when you have only an IP address instead of a hostname. Even worse is when you can’t even figure out why the alert fired or what it is about, because all it says is Problem with host '<null>'. Make sure you can identify the host involved, and provide a better description of why the alert fired. Have your alert link to the specific log message that caused it, and remember to ensure your logging provides enough information to track down where it came from and when.
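One way to enforce this is to refuse to render an alert message that is missing the pieces a responder needs. This is just a sketch: the `Alert` class and its fields are my own invention, and in a real system you would probably route a malformed alert to a fallback channel rather than raise.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    host: str
    reason: str
    log_url: str  # link to the specific log message that caused the alert

    def render(self) -> str:
        # Refuse to send an alert nobody can act on.
        for field_name in ("host", "reason", "log_url"):
            value = getattr(self, field_name)
            if not value or value == "<null>":
                raise ValueError(f"alert is missing {field_name}")
        return f"[{self.host}] {self.reason}\nLog: {self.log_url}"


good = Alert(
    host="web-01",
    reason="5 failed sudo attempts in 1 minute",
    log_url="https://siem.example/log/abc",
)
text = good.render()
```

Rendering an alert whose host is `'<null>'` raises a `ValueError`, which is a lot easier to notice in testing than at 4am.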

Have runbooks for your alerts

Alerts should describe why this event needs investigation and how. Someone else might respond to your alerts, or your future self a year from now at 4am might respond.

Good defenders document their alert rules.

You should have runbooks for your alerts to explain the possible significance of this alert. For example, a flatline alert for logs should explain that not only could this be due to the server being down or a firewall rule accidentally blocking incoming logs, but it could also be due to an attacker disabling logging. The runbook should describe how to diagnose the possible causes and actions. I assure you that when your new team member ends up having to respond to an alert while you’re on vacation, and the world around them is burning, they will be grateful for a step-by-step guide on what they need to do.

You may want to have an ID with your alert message so you can find the rule that created the alert. This will be needed when you end up with multiple ways of checking for things that result in similar alert messages.

Store your alert rules in git or something that can track changes so you know who created the alert rule and when.

Test your alert rules

A large number of your alert rules will never fire. These are checks for bad things, and hopefully bad things don’t happen. This situation is troublesome though because you need to ensure that the alert rules you have that never fire actually work. As time goes on, you will unfortunately find alert rules that don’t work, so review your alert rules quarterly and make tests for your alert rules.

Ideally, alert rules should be created like code, with unit tests. Likely though you will need to provide directions for manual actions to get them to fire. You shouldn’t just create an alert rule without testing that it actually will do what you want. Capture and document an example of the log message that this alert rule was created for.
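Here is a sketch of what a rule with unit tests might look like, assuming your rules can be expressed as plain functions. The rule itself and the hosts and addresses in the sample lines are made up; the point is that the captured sample log lives right next to the rule and is exercised by a test.

```python
import unittest

# Captured example of the log message this rule was written for.
SAMPLE_LOG = (
    "Mar  3 04:12:55 web-01 sshd[2211]: "
    "Failed password for root from 203.0.113.9 port 52144 ssh2"
)


def failed_root_ssh(line: str) -> bool:
    """Rule: fire on failed SSH password attempts for root."""
    return "sshd" in line and "Failed password for root" in line


class FailedRootSshTest(unittest.TestCase):
    def test_fires_on_captured_sample(self):
        self.assertTrue(failed_root_ssh(SAMPLE_LOG))

    def test_ignores_successful_login(self):
        line = (
            "Mar  3 04:13:10 web-01 sshd[2211]: "
            "Accepted publickey for deploy from 198.51.100.4 port 40022 ssh2"
        )
        self.assertFalse(failed_root_ssh(line))
```

Saved to a file, this runs with `python -m unittest`, so it can sit in the same repo and CI as the rules themselves.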

Have alert levels

You should have varying levels of alerts depending on the likelihood of occurrence, possible severity, and timeliness of action required.

| Alert level | Alert communication | Response time |
|---|---|---|
| Informational | Log a message to a chat room. | No response needed. |
| Warning | Create a ticket. | Take action within 24 hours. |
| Critical | Page you. | Take action within 10 minutes. |

An alert that creates a ticket should also log a message to your chat room. A page should create a ticket and log the message.
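That cascade is easy to encode so nobody has to remember it. A minimal sketch, where the channel names (`chat`, `ticket`, `page`) are placeholders for whatever integrations you actually use:

```python
from enum import Enum
from typing import List


class Level(Enum):
    INFORMATIONAL = 1
    WARNING = 2
    CRITICAL = 3


def channels_for(level: Level) -> List[str]:
    """Each level includes the channels of the levels below it."""
    channels = ["chat"]
    if level.value >= Level.WARNING.value:
        channels.append("ticket")
    if level.value >= Level.CRITICAL.value:
        channels.append("page")
    return channels
```

With this, a critical alert goes to chat, a ticket, and a page, while an informational one only lands in chat.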

It’s important to create tickets for alert notifications so they actually get resolved. Otherwise, you’re going to end up getting an alert for something, ignore it as you’re busy on something else, forget about it, and a month later realize something bad happened because you never got back to that alert you forgot about. If the alert isn’t important enough to be put on your todo list, then it probably should only be informational.

Taking action might only mean that you ack the alert and begin investigating, or that you resolve it because no action is needed. The majority of your alert notifications should go off during work hours, because they are triggered by people doing work. For example, a flatline alert rule that goes off when logs stop being received from a system might fire simply because that system was replaced with a new one.
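For reference, the logic of a flatline rule is tiny. This sketch assumes you can get the time of the most recent log per host from your SIEM; the 15 minute threshold and the host names are arbitrary choices for the example.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def flatlined_hosts(
    last_seen: Dict[str, datetime],
    now: datetime,
    max_silence: timedelta = timedelta(minutes=15),
) -> List[str]:
    """Return hosts whose most recent log is older than `max_silence`."""
    return sorted(h for h, seen in last_seen.items() if now - seen > max_silence)


now = datetime(2017, 3, 3, 12, 0)
last_seen = {
    "web-01": now - timedelta(minutes=2),  # still logging
    "db-01": now - timedelta(hours=3),     # silent for three hours
}
silent = flatlined_hosts(last_seen, now)
```

Here only `db-01` is reported. Whether that means a decommissioned box or an attacker who disabled logging is what the responder has to work out.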

Tune your alert notifications to the relevant people. Pages in the middle of the night should only go to one or two people, and escalate from there if they don’t ack within a few minutes. Getting a page for someone else’s problem is annoying. You should have multiple chat rooms for different alert notifications.

The retailer Target ignored their alerts prior to their hack because they supposedly were getting millions per day. Those aren’t alerts. Those are logs. You should see fewer than a hundred alert notifications per day, of which fewer than 10 need to be acted on. Critical alert notifications should average only about one event per week, where an event may involve a couple of alerts firing at once.

Make alert writing part of your processes

Write alert rules whenever you introduce a new service into your environment. As part of your purchasing decisions you should make sure any service you use has logs you can ingest. Collect logs and create alert rules from those logs. This is important for not only on-prem solutions, but also cloud solutions.

Don’t create alert rules solely based on the data you have. Identify what you want to alert on, and then try to make sure you have logs that will allow you to create those alerts.

Write alert rules when you find yourself making manual checks. A lot of the activities of “hunting” should be turned into alerts. If you’re manually hunting logs for the same things daily/weekly/monthly, you should turn those hunts into automated alerts so you’re notified of them immediately when they happen as opposed to whenever you have free time to “hunt” for them.

Write alert rules as part of your post-mortem process for any incident. Consider what alert rules could have better detected aspects of the incident earlier. This doesn’t need to be for just major incidents. This can be for little things you discover that have no immediate impact, but if coupled with other issues could be bad.

Automate alert remediation

To reduce alert fatigue and the number of steps you need to take when you see an alert notification, consider how your response can be automated.

Examples:

- A malware alert on a laptop automatically resets all the employee’s passwords and creates a ticket for your helpdesk to collect their laptop for forensic analysis. Or alternatively, if you’re worried about false positives, then it just remotely kicks off some file collection for you to analyze further.

- A malware alert on your server results in shutting down your entire site until you can investigate. This may not be appropriate for a business that requires high availability, but might be for a banking website. If you run a bitcoin exchange you’re crazy not to automate shutting down your whole site when something odd is detected.

- For sensitive actions, I love Slack’s security team’s technique of automatically asking the employee if that was really them and making them ack it with 2FA (link).

Create different types of alerts

There are all sorts of reasons to create alerts, that can indicate all sorts of issues. Many alerts can fall under multiple categories. Here are some examples:

| Categories | Example |
|---|---|
| Operations | |
| Known bads | |
| Rare, dangerous actions | |
| Violations of best practices or guidelines | |
| Deception traps | |
| Insanity checks | These are things that should never happen. If it does, it violates your assumptions of the world, and something has gone terribly wrong. |

Understand the context of your alerts. Different alerts make sense in different places. Detecting an nmap scan on your public servers is pointless, as you would be flooded with alert notifications. Detecting it inside your corp network is excellent.

Useful is better than non-existent. It might not be possible to perfectly detect every possible configuration of an nmap scan, but in your internal network you could detect large numbers of attempted connections to different servers from a single end-point, or you could simply have a canary service running and if anything connects to it or just throws a packet at it, alert.
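The "large numbers of attempted connections to different servers" check can be sketched in a few lines. I am assuming you have connection logs as (source, destination) pairs for some time window; the threshold of 20 distinct targets is a number I picked for the example and would need tuning for your network.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def likely_scanners(
    connections: Iterable[Tuple[str, str]],
    max_distinct_targets: int = 20,
) -> List[str]:
    """Flag sources that attempted connections to many different servers.

    `connections` is (source_ip, destination_ip) pairs from one time window.
    """
    targets: Dict[str, Set[str]] = defaultdict(set)
    for src, dst in connections:
        targets[src].add(dst)
    return sorted(src for src, dsts in targets.items() if len(dsts) > max_distinct_targets)


# One endpoint touching 50 servers, another hitting the same server 100 times.
window = [("10.0.0.5", f"10.0.1.{i}") for i in range(50)]
window += [("10.0.0.9", "10.0.1.1")] * 100
suspects = likely_scanners(window)
```

Counting distinct destinations rather than total connections is what keeps the chatty but harmless `10.0.0.9` out of the results.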

Choose to block, alert, both, or neither

For any potential badness, you may wish to block and alert, only block, only alert, or neither.

| | Block | Allow |
|---|---|---|
| Alert | SSH attempt with failed 2FA | SSH attempt from never before used IP within the US |
| Ignore | SSH attempt from non-US IP | Normal SSH attempt from the main office |
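The four cells of the table above can be written as a small decision function. This is a sketch under my own assumptions: the parameter names are made up, and the order of the checks (for example, that a non-US attempt is silently blocked even if 2FA also failed) is a precedence I chose, since the table doesn’t say what to do when conditions overlap.

```python
from typing import NamedTuple


class Decision(NamedTuple):
    block: bool
    alert: bool


def ssh_decision(country: str, passed_2fa: bool, ip_seen_before: bool) -> Decision:
    """Map an SSH attempt onto block/allow and alert/ignore."""
    if country != "US":
        return Decision(block=True, alert=False)   # block, ignore
    if not passed_2fa:
        return Decision(block=True, alert=True)    # block, alert
    if not ip_seen_before:
        return Decision(block=False, alert=True)   # allow, alert
    return Decision(block=False, alert=False)      # allow, ignore
```

Writing it out like this forces you to decide what happens in the overlapping cases instead of discovering it during an incident.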

There have been attempts in the past at having patches alert on attempted exploitation: the patch fixes the vulnerability (blocks the exploit), and also alerts whenever someone attempts to exploit it. I wish this were more common.

Sometimes it’s easier to make an alert than it is to block something, so don’t trap yourself spending too much time trying to block unlikely things with minimal impact. For example, maybe your employees should always be using Google Chrome. You could build something to detect and block if they ever use Internet Explorer, but it’s easier to just detect if they successfully authenticate to your internal site with that browser and alert on it so you can gently remind them not to do that.

Closing thoughts

Alerting is how you stay informed about the things on your network you want to know about, so you can have some assurance that your network is secure. You cannot prove your network is secure and not compromised, so you must continuously look for evidence that it is insecure or compromised.

This is not a fun game, because when you win (you find an example) it means you lost (your network is insecure or compromised). If you find no examples, you cannot assume everything is fine, but you can have more confidence than if you hadn’t looked. This situation is true of any risk-based endeavor, and is why we have pre-flight checklists for airplanes and all the other due diligence and risk reduction steps in the world. The better your alerting becomes, the sooner you’ll be able to identify issues before they become major incidents.